03 Using R syntax

Florian Berding, Yuliia Tykhonova, Julia Pargmann, Andreas Slopinski, Elisabeth Riebenbauer, Karin Rebmann

Source:vignettes/classification_tasks.Rmd

classification_tasks.Rmd1 Introduction and Overview

1.1 Preface

This vignette introduces the package aifeducation and its usage with R syntax. For users who are unfamiliar with R or those who do not have coding skills in relevant languages (e.g. python), we recommend to start with the graphical user interface AI for Education - Studio which is described in the vignette 02 Using the graphical user interface Aifeducation - Studio.

We assume that aifeducation is installed as described in vignette 01 Get Started. The introduction starts with a brief explanation of basic concepts, which are necessary to work with this package.

1.2 Basic Concepts

In the educational and social sciences, assigning scientific concepts to an observation is an important task that allows researchers to understand an observation, to generate new insights, and to derive recommendations for research and practice.

In educational science, several areas deal with this kind of task. For example, diagnosing students’ characteristics is an important aspect of a teachers’ profession and necessary to understand and promote learning. Another example is the use of learning analytics, where data about students is used to provide learning environments adapted to their individual needs. On another level, educational institutions such as schools and universities can use this information for data-driven performance decisions (Laurusson & White 2014) as well as where and how to improve it. In any case, a real-world observation is aligned with scientific models to use scientific knowledge as a technology for improved learning and instruction.

Supervised machine learning is one concept that allows a link between real-world observations and existing scientific models and theories (Berding et al. 2022). For educational science, this is a great advantage because it allows researchers to use the existing knowledge and insights to apply AI. The drawback of this approach is that the training of AI requires both information about the real world observations and information on the corresponding alignment with scientific models and theories.

A valuable source of data in educational science are written texts, since textual data can be found almost everywhere in the realm of learning and teaching (Berding et al. 2022). For example, teachers often require students to solve a task which they provide in a written form. Students have to create a solution for the tasks which they often document with a short written essay or a presentation. This data can be used to analyze learning and teaching. Teachers’ written tasks for their students may provide insights into the quality of instruction while students’ solutions may provide insights into their learning outcomes and prerequisites.

AI can be a helpful assistant in analyzing textual data since the analysis of textual data is a challenging and time-consuming task for humans.

Please note that an introduction to content analysis, natural language processing or machine learning is beyond the scope of this vignette. If you would like to learn more, please refer to the cited literature.

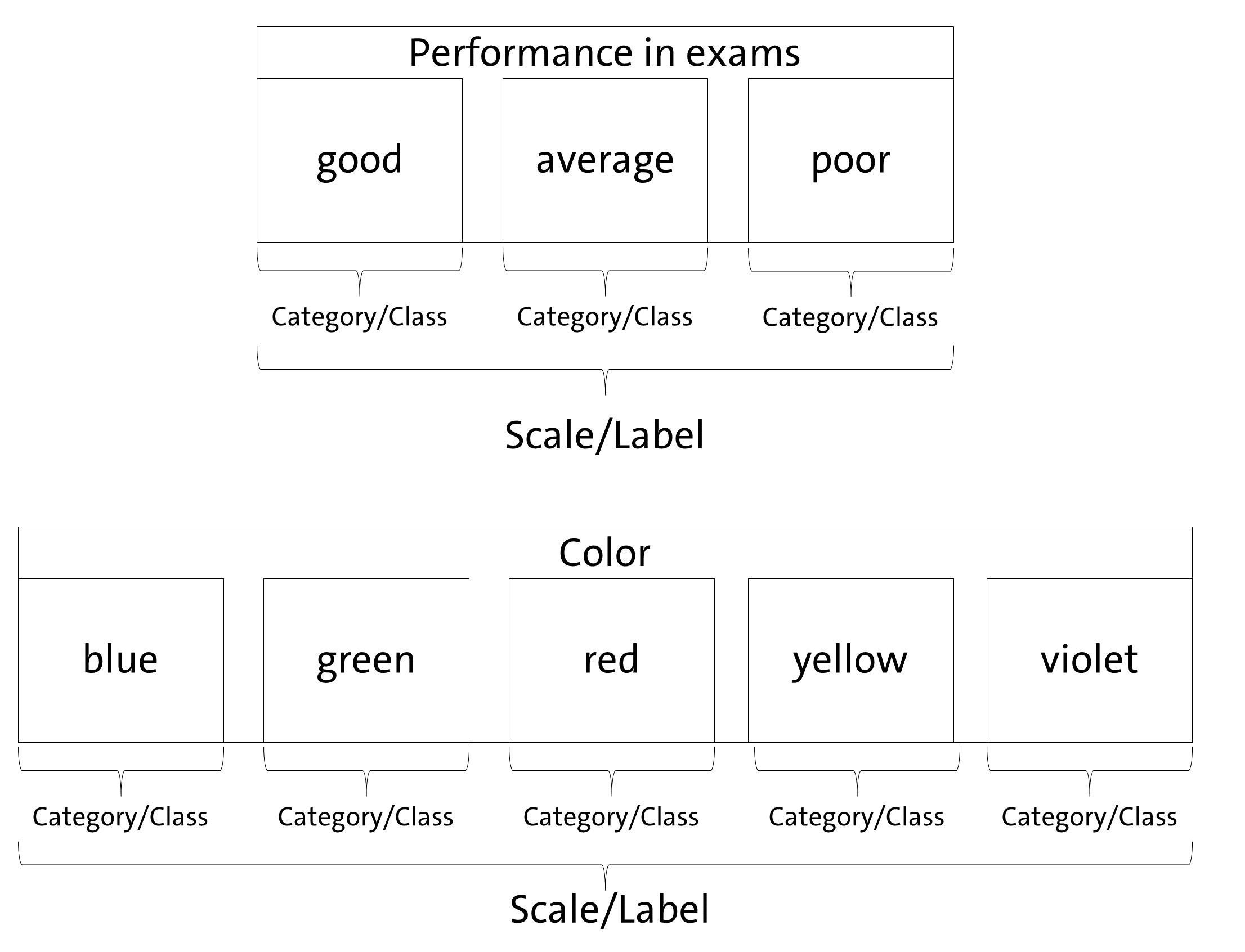

Before we start, it is necessary to introduce a definition of our understanding of some basic concepts, since applying AI to educational contexts means to combine the knowledge of different scientific disciplines using different, sometimes overlapping, concepts. Even within a single research area, concepts are not unified. Figure 1 illustrates this package’s understanding.

Since aifeducation looks at the application of AI for classification tasks from the perspective of the empirical method of content analysis, there is some overlapping between the concepts of content analysis and machine learning. In content analysis, a phenomenon like performance or colors can be described as a scale/dimension which is made up by several categories (e.g. Schreier 2012, pp. 59). In our example, an exam’s performance (scale/dimension) could be “good”, “average” or “poor”. In terms of colors (scale/dimension) categories could be “blue”, “green”, etc. Machine learning literature uses other words to describe this kind of data. In machine learning, “scale” and “dimension” correspond to the term “label” while “categories” refer to the term “classes” (Chollet, Kalinowski & Allaire 2022, p. 114).

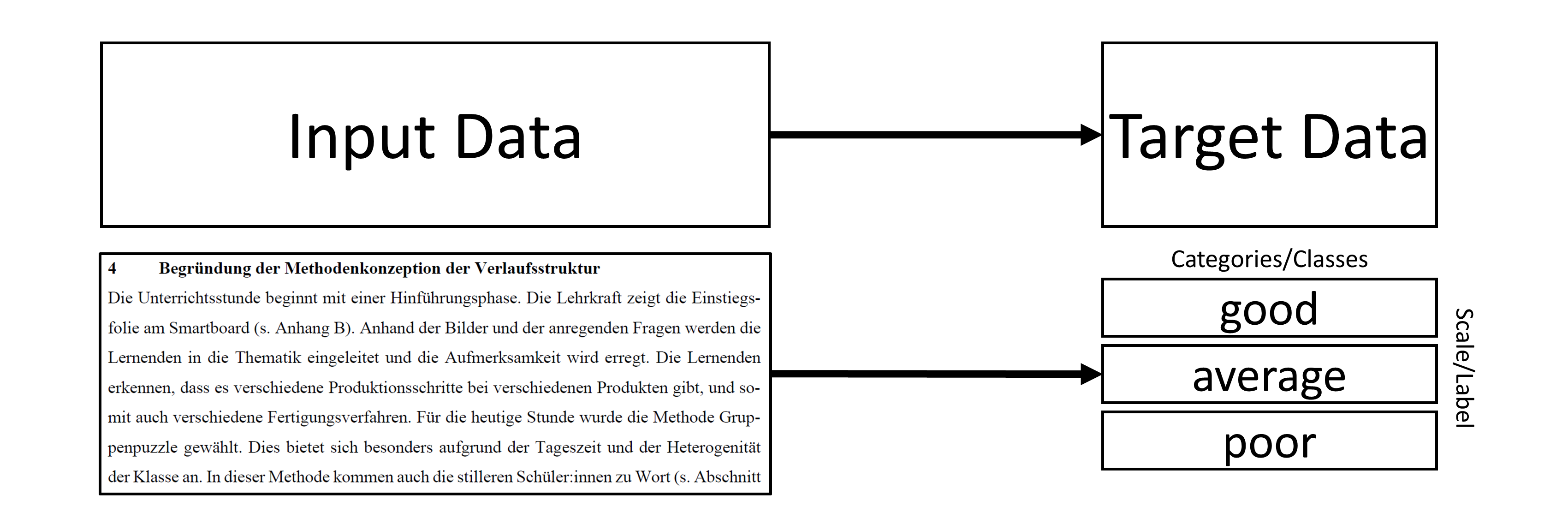

With these clarifications, classification means that a text is assigned to the correct category of a scale or, respectively, that the text is labeled with the correct class. As Figure 2 illustrates, two kinds of data are necessary to train an AI to classify text in line with supervised machine learning principles.

By providing AI with both the textual data as input data and the corresponding information about the class as target data, AI can learn which texts imply a specific class or category. In the above exam example, AI can learn which texts imply a “good”, an “average” or a “poor” judgment. After training, AI can be applied to new texts and predict the most likely class of every new text. The generated class can be used for further statistical analysis or to derive recommendations about learning and teaching.

In use cases as described in this vignette, AI has to “understand” natural language: „Natural language processing is an area of research in computer science and artificial intelligence (AI) concerned with processing natural languages such as English and Mandarin. This processing generally involves translating natural language into data (numbers) that a computer can use to learn about the world. (…)” (Lane , Howard & Hapke 2019, p. 4)

Thus, the first step is to transform raw texts into a a form that is usable for a computer, hence raw texts must be transformed into numbers. In modern approaches, this is usually done through word embeddings. Campesato (2021, p. 102) describes them as “the collective name for a set of language modeling and feature learning techniques (…) where words or phrases from the vocabulary are mapped to vectors of real numbers.” The definition of a word vector is similar: „Word vectors represent the semantic meaning of words as vectors in the context of the training corpus.” (Lane, Howard & Hapke 2019, p. 191). In the next step, the words or text embeddings can be used as input data and the labels as target data when training AI to classify a text.

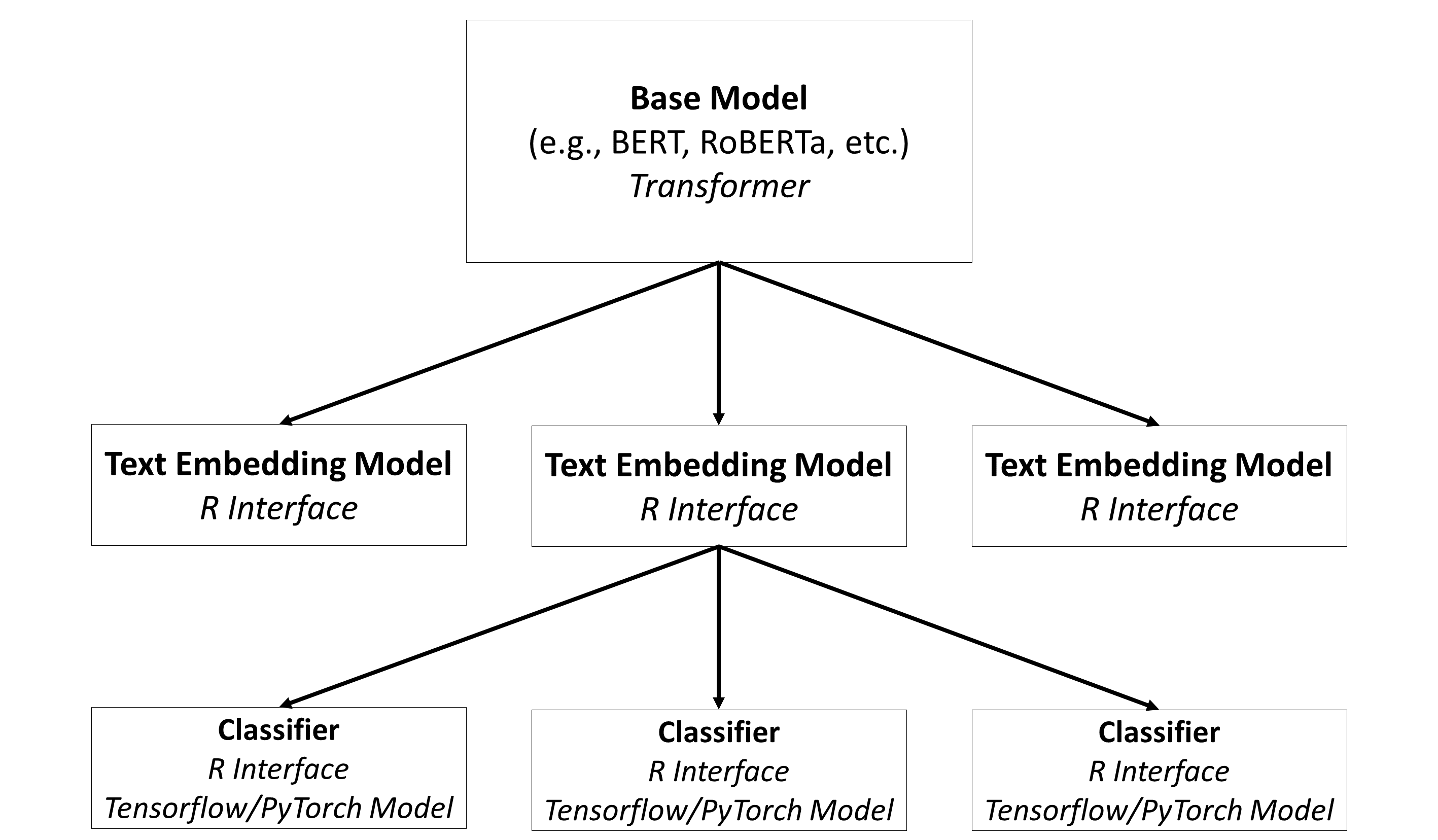

In aifeducation, these steps are covered with three different types of models, as shown in Figure 3.

Base Models: The base models contain the capacities to understand natural language. In general, these are transformers such as BERT, RoBERTa, etc. A huge number of pre-trained models can be found on Hugging Face.

Text Embedding Models: The modes are built on top of base models and store directions on how to use these base models for converting raw texts into sequences of numbers. Please note that the same base model can be used to create different text embedding models.

Classifiers: Classifiers are used on top of a text embedding model. They are used to classify a text into categories/classes based on the numeric representation provided by the corresponding text embedding model. Please note that a text embedding model can be used to create different classifiers (e.g. one classifier for colors, one classifier to estimate the quality of a text, etc.).

2 Start Working

2.1 Starting a New Session

Before you can work with aifeducation, you must set up a new

R session. First, you can load aifeducation.

Second, it is necessary that you set up python via ‘reticulate’ and

chose the environment where all necessary python libraries are

available. In case you installed python as suggested in vignette 01 Get started you may start a new session

like this:

library(aifeducation)

prepare_session()

#> Python is already initalized with the virtual environment 'aifeducation'.

#> Try to use this environment.

#> Detected OS: windows

#> Checking python packages. This can take a moment.

#> All necessary python packages are available.

#> python: 3.12

#> torch: 2.9.0+cu129

#> pyarrow: 21.0.0

#> transformers: 4.57.1

#> tokenizers: 0.22.1

#> pandas: 2.3.3

#> datasets: 3.6.0

#> calflops: 0.3.2

#> codecarbon: 3.0.7

#> safetensors: 0.6.2

#> torcheval: 0.0.7

#> accelerate: 1.11.0

#> numpy: 2.3.4

#> GPU Acceleration: TRUE

#> Location for Temporary Files:C:\Users\User\AppData\Local\Temp\RtmpmGHm1E/r_aifeducationPlease remember: Every time you start a new session in R, you have to load the library

aifeducationand to configure python. We recommend to use the functionprepare_sessionbecause it performs all necessary steps for setting up python correctly.

Now you can start your work.

2.2 Data Management

2.2.1 Introduction

In the context of use cases for aifeducation, three different types of data are necessary: raw texts, text embeddings, and target data which represent the categories/classes of a text.

To deal with the first two types and to allow the use of large data sets that may not fit into the memory of your machine, the packages ships with two specialized objects.

The first is LargeDataSetForText. Objects of this class

are used to read raw texts from .txt, .pdf, and .xlsx files and store

them for further computations. The second is

LargeDataSetForTextEmbeddings which are used to store the

text embeddings of raw texts which are generated with

TextEmbeddingModels. We will describe the transformation of

raw texts into text embeddings later.

2.2.2 Raw Texts

The creation of a LargeDataSetForText is necessary if

you would like to create or train a base model or to generate text

embeddings. In case you would like to create such a data set for the

first time you have to create an empty data set first:

raw_texts <- LargeDataSetForText$new()To fill this object with raw texts different methods are available depending on the file type you use for storing raw texts.

.txt files

The first alternative is to store raw texts in .txt files. To use these you have to structure your data in a specific way:

- Create a main folder for storing your data.

- Store every raw text/document into a single .txt file into its own folder within the main folder. In every folder there should be only one file for a raw text/document.

- Add an additional .txt file to the folder named

bib_entry.txt. This file contains bibliographic information for the raw text. - Add an additional .txt file to the folder named

license.txtwhich contains a short statement for the license of the text such as “CC BY”. - Add an additional .txt file to the folder named

url_license.txtwhich contains the url/link to the license’s text such as “https://creativecommons.org/licenses/by/4.0/”. - Add an additional .txt file to the folder named

text_license.txtwhich contains the full license in raw texts. - Add an additional .txt file to the folder named

url_source.txtwhich contains the url/link to the text file in the internet.

Applying these rules may result in a data structure as follows:

- Folder “main folder”

- Folder Text A

- text_a.txt

- bib_entry.txt

- license.txt

- url_license.txt

- text_license.txt

- url_source.txt

- Folder Text B

- text_b.txt

- bib_entry.txt

- license.txt

- url_license.txt

- text_license.txt

- url_source.txt

- Folder Text C

- text_C.txt

- bib_entry.txt

- license.txt

- url_license.txt

- text_license.txt

- url_source.txt

- Folder Text A

Now you can call the method add_from_files_txt by

passing the path to the directory of the main folder to

dir_path.

raw_texts$add_from_files_txt(

dir_path = "main folder",

clean_text = TRUE

)The data set will now read all the raw texts in the main folder and

will assign every text the corresponding bib entry, license, etc. Please

note that adding a bib_entry.txt, license.txt,

url_license.txt, text_license.txt, and

url_soruce.text to every folder is optional. If there is no

such file in the corresponding folder, there will be an empty entry in

the data set. However, against the backdrop of the European AI Act, we

recommend to provide both the license and bibliographic information to

make the documentation of your models more straightforward. Furthermore,

some licenses such as those provided by Creative Commons require

statements about the creators, a copyright note, a URL or link to the

source material (if possible), the license of the material and a URL or

link to the license’s text on the internet or the license text itself.

Please check the licenses of the material you are using for the

requirements.

.pdf files

The second alternative is to use .pdf files as a source for raw texts. Here, the necessary structure is similar to .txt files:

- Create a main folder for storing your data.

- Store every raw text/document into a single .pdf file into its own folder within the main folder. In every folder there should be only one file for a raw text/document.

- Add an additional .txt file to the folder named

bib_entry.txt. This file contains bibliographic information for the raw text. - Add an additional .txt file to the folder named

license.txtwhich contains a short statement for the license of the text such as “CC BY”. - Add an additional .txt file to the folder named

url_license.txtwhich contains the URL/link to the license’s text such as “https://creativecommons.org/licenses/by/4.0/”. - Add an additional .txt file to the folder named

text_license.txtwhich contains the full license in raw texts. - Add an additional .txt file to the folder named

url_source.txtwhich contains the url/link to the text file in the internet.

Applying these rules may result in a data structure as follows:

- Folder “main folder”

- Folder Text A

- text_a.pdf

- bib_entry.txt

- license.txt

- url_license.txt

- text_license.txt

- url_source.txt

- Folder Text B

- text_b.pdf

- bib_entry.txt

- license.txt

- url_license.txt

- text_license.txt

- url_source.txt

- Folder Text C

- text_C.pdf

- bib_entry.txt

- license.txt

- url_license.txt

- text_license.txt

- url_source.txt

- Folder Text A

Please not that all files except the text file must be .txt, not .pdf.

Now you can call the method add_from_files_pdf by

passing the path to the directory of the main folder to

dir_path.

raw_texts$add_from_files_pdf(

dir_path = "main folder",

clean_text = TRUE

)As stated above, bib_entry.txt,

license.txt, url_license.txt,

text_license.txt, and url_soruce.text are

optional.

.xlsx files

The third alternative is to store the raw texts into .xlsx files. This alternative is useful if you have many small raw texts. For raw texts that are very large such as books or papers we recommend to store them as .txt or .pdf files.

In order to add raw texts from .xlsx files, the files need a special structure:

- Create a main folder for storing all .xlsx files you would like to read.

- All .xlsx files must contain the names of the columns in the first row and the names must be identical for each column across all .xslx files you would like to read.

- Every .xslx files must contain a column storing the text ID and must contain a column storing the raw text. Every text must have a unique ID across all .xlsx files.

- Every .xslx file can contain an additional column for the bib entry.

- Every .xslx file can contain an additional column for the license.

- Every .xslx file can contain an additional column for the license’s URL.

- Every .xslx file can contain an additional column for the license’s text.

- Every .xslx file can contain an additional column for the source’s URL.

Your .xlsx file may look like

| id | text | bib | license | url_license | text_license | url_source |

|---|---|---|---|---|---|---|

| z3 | This is an example. | Author (2019) | CC BY | Example URL | Text | Example URL |

| a3 | This is a second example. | Author (2022) | CC BY | Example URL | Text | Example URL |

| … | … | … | … |

Now you can call the method add_from_files_xlsx by

passing the path to the directory of the main folder to

dir_path. Please do not forget to specify the column names

for ID and text as well as for the other columns.

raw_texts$add_from_files_xlsx(

dir_path = "main folder",

id_column = "id",

text_column = "text",

bib_entry_column = "bib_entry",

license_column = "license",

url_license_column = "url_license",

text_license_column = "text_license",

url_source_column = "url_source"

)Cleant text

For .txt and .pdf files you can set the argument

clean_text=TRUE. This requests an algorithm that should

pre-process the raw texts and applies the following modifications:

- Some special symbols are removed.

- All spaces at the beginning and the end of a row are removed.

- Multiple spaces are reduced to single space.

- All rows with a number from 1 to 999 at the beginning or at the end are removed (header and footer).

- List of content is removed.

- Hyphenation is made undone.

- Line breaks within a paragraph are removed.

- Multiple line breaks are reduced to a single line break.

The aim of these changes is to provide a clean plain text in order to increase the performance and quality of all analyses.

IDs In case of .xlsx files, the texts’ IDs are set to the IDs stored in the corresponding column for ID. In case of .pdf and .txt files, the file names are used as ID (without the file extension).

Please note that a consequence of this is that two files text_01.txt and text_01.pdf have the same ID, which is not allowed. Please ensure that you use unique IDs across file formats.

Saving and loading a data set

Once you have create a LargeDataSetForText you can save

your data to disk by calling the function save_to_disk. In

our example the code would be:

save_to_disk(

object = raw_texts,

dir_path = "examples",

folder_name = "raw_texts"

)The argument object requires the object you would like

to save. In our case this is raw_texts. With

dir_path you specific the location where to save the object

and with folder_name you define the name of the folder that

will be created within that directory. In this folder the data set is

saved.

To load an existing data set, you can call the function

load_from_disk with the directory path where you stored the

data. In our case this would be:

raw_text_dataset <- load_from_disk("examples/raw_texts")Now you can work with your data.

2.2.3 Text Embeddings

The numerical representations of raw texts (called text embeddings)

are stored with objects of class

LargeDataSetForTextEmbeddings. These kinds of data sets are

generated by some models such as TextEmbeddingModels. Thus,

you will never need to create such a data set manually.

However, you will need this kind of data set to train a classifier or

to predict the categories/classes of raw texts. Thus, it may be

advantageous to save already transformed data. You can save and load an

object of this class with the functions save_to_disk and

load_from_disk.

Let us assume that we have a

LargeDataSetForTextEmbeddings called

text_embeddings. Saving this object may look like:

save_to_disk(

object = text_embeddings,

dir_path = "examples",

folder_name = "text_embeddings"

)The data set will be saved at examples/text_embeddings.

Loading this data set may look like:

new_text_embeddings <- load_from_disk("examples/text_embeddings")2.2.4 Target Data

The last data type necessary for working with

aifeducation are the categories/classes of given raw texts.

For this kind of data we currently do not provide a special object. You

just need a named factor storing the

classes/categories for a dimension. It is also important that the names

equal the ID of the corresponding raw texts/text embeddings since

matching the classes/categories to texts is done with the help of these

names.

Saving and loading can be done with R’s functions

save and load.

2.3 Example Data for this Vignette

To illustrate the steps in this vignette, we cannot use data from

educational settings since these data is generally protected by privacy

policies. Therefore, we use a subset of the Standford Movie Review

Dataset provided by Maas et al. (2011) which is part of the package. You

can access the data set with imdb_movie_reviews.

We now have a data set with three columns. The first column contains the raw text, the second contains the rating of the movie (positive or negative), and the third column the ID of the movie review. About 200 reviews imply a positive rating of a movie and about 100 imply a negative rating.

For this tutorial, we modify this data set by setting about 50

positive and 25 negative reviews to NA, indicating that

these reviews are not labeled.

example_data <- imdb_movie_reviews

example_data$label <- as.character(example_data$label)

example_data$label[c(76:100)] <- NA

example_data$label[c(201:250)] <- NA

table(example_data$label)

#>

#> neg pos

#> 75 150We will now create a LargeDataSetForText from this

data.frame. Before we can do this we must ensure that the

data.set has all necessary columns:

colnames(example_data)

#> [1] "text" "label" "id"Now we have to add two columns. For this tutorial we do not add any bibliographic or license information although this is recommended in practice.

example_data$bib_entry <- NA

example_data$license <- NA

colnames(example_data)

#> [1] "text" "label" "id" "bib_entry" "license"Now the data.frame is ready as input for our data set.

The “label” column will not be included.

data_set_reviews_text <- LargeDataSetForText$new()

data_set_reviews_text$add_from_data.frame(example_data)We save the categories/labels within a separate factor.

We will now use this data to show you how to use the different objects and functions in aifeducation.

3 Base Models

3.1 Overview

Base models are the foundation of all further models in aifeducation. At the moment, these are transformer models such modernBERT (Warner et al. 2024), MPNet (Song et al. 2020), BERT (Devlin et al. 2019), RoBERTa (Liu et al. 2019), and Funnel-Transformer (Dai et al. 2020). In general, these models are trained on a large corpus of general texts in the first step. In the next step, the models are fine-tuned to domain-specific texts and/or fine-tuned for specific tasks. Since the creation of base models requires a huge number of texts resulting in high computational time, it is recommended to use pre-trained models. These can be found on Hugging Face. Sometimes, however, it is more straightforward to create a new model to fit a specific purpose. aifeducation supports the option to both create and train/fine-tune base models.

In this chapter we concentrate on creating a new model. The usage of a pre-trained model from Hugging Face is described in chapter 4.

Every transformer model is composed of two parts:

- the tokenizer which splits raw texts into smaller pieces to model a large number of words with a limited, small number of tokens and

- the neural network that is used to model the capabilities for understanding natural language.

3.2 Creation of a Tokenizer

The first stept is to set up a tokenizer.

In aifeducation you can choose between different models. In

this example we use a WordPieceTokenizer. To create a new one we first

call the method new.

tokenizer <- WordPieceTokenizer$new()Now we have an empty tokenizer which we can configure by calling the

corresponding method. The available parameter depend on the kind of

tokenizer. In the case of a WordPieceTokenizer we have only two options.

The first, vocab_size determines how many tokens the

tokenizer should know. The second determines if all words should be

transformed to lower case before tokenization. since we use only a very

small data set for illustrating the usage of the package we set

vocab_size = 200. In real case applications this value

should be larger. You can find more information on this topic in

vignette 04 Model configuration and

training.

tokenizer$configure(

vocab_size = 200,

vocab_do_lower_case = FALSE

)In the next step we have to train the model by calling the method

train. Here we pass a dataset for text to the parameter

text_dataset. This raw text is used to train the model.

In addition, we can set up sustainability tracking of this process to

estimate its ecological impact with help of the python library

codecarbon. Thus, sustain_track is set to

TRUE by default. If you use the sustainability tracker you

must provide the alpha-3 code for the country where your computer is

located (e.g., “CAN”=“Canada”, “DEU”=“Germany”). A list with the codes

can be found on Wikipedia.

The reason is that different countries use different sources and

techniques for generating their energy resulting in a specific impact on

CO2 emissions. For the USA and Canada you can additionally specify a

region by setting sustain_region. Please refer to the

documentation of codecarbon for more information.

tokenizer$train(

text_dataset = data_set_reviews_text,

statistics_max_tokens_length = 512,

sustain_track = TRUE,

sustain_iso_code = "DEU",

sustain_region = NULL,

sustain_interval = 15,

trace = TRUE

)

#> 2025-11-18 07:10:03 Start Sustainability Tracking

#> 2025-11-18 07:10:06 Stop Sustainability TrackingTraining a tokenizer is a very fast process. Thus, even for a huge number of texts the computations should take only some minutes.

Now the model is ready to use. At the beginning we can explore some tokenizer statistics.

# We use t() to improve the readability of the table

t(tokenizer$get_tokenizer_statistics())

#> [,1]

#> step "creation"

#> date "2025-11-18 07:10:06"

#> max_tokens_length "512"

#> n_sequences "653"

#> n_words "90195"

#> n_tokens "257269"

#> mu_t "393.9801"

#> mu_w "138.124"

#> mu_g "2.852364"In this table you can see an estimation of the number of words for the whole training data set, the total number of tokens resulting from the raw texts and the number of sequences if the length of a sequence is limited to the value shown in the column ‘max_token_length’. Furthermore, you can see the three statistics proposed by Kaya and Tantug (2024, p.5). refers to the average number of tokens per sequence, refers to the average number of words per sequence, and refers to the average number of tokens per word. is called the tokenizer granularity rate and describes how many tokens are necessary to represent a single word. This value is very important as a higher value implies that the same text is spitted into a longer sequence of tokens than for a tokenizer with a lower value. Since large language models have a maximum limit on the length of a token sequence this value has in impact on how many words of a text can be processed by a base model.

If you would like to see how the tokenizer works you can call the method ‘encode’.

tokenizer$encode(

raw_text = "This is a good movie.",

token_encodings_only = TRUE,

token_to_int = FALSE

)

#> [[1]]

#> [[1]][[1]]

#> [1] "[CLS]" "T" "##h" "##is" "is" "a" "g" "##o" "##o"

#> [10] "##d" "m" "##o" "##v" "##i" "##e" "." "[SEP]" "[PAD]"

#> [19] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [28] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [37] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [46] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [55] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [64] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [73] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [82] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [91] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [100] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [109] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [118] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [127] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [136] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [145] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [154] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [163] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [172] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [181] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [190] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [199] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [208] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [217] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [226] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [235] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [244] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [253] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [262] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [271] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [280] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [289] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [298] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [307] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [316] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [325] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [334] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [343] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [352] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [361] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [370] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [379] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [388] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [397] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [406] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [415] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [424] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [433] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [442] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [451] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [460] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [469] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [478] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [487] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [496] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"

#> [505] "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]" "[PAD]"Here you can see that the tokenizer split the words into several tokens and adds some special tokens.

tokenizer$encode(

raw_text = "This is a good movie.",

token_encodings_only = TRUE,

token_to_int = TRUE

)

#> [[1]]

#> [[1]][[1]]

#> [1] 0 53 114 174 188 61 67 107 107 108 73 107 113 102 97 17 1 3

#> [19] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [37] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [55] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [73] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [91] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [109] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [127] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [145] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [163] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [181] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [199] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [217] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [235] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [253] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [271] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [289] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [307] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [325] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [343] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [361] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [379] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [397] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [415] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [433] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [451] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [469] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [487] 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

#> [505] 3 3 3 3 3 3 3 3If you change the parameter token_to_int = TRUE you

receive the same result but instead of the tokens you get the index of

the token in the tokenizer’s vocabulary. For example the id 0 refers to

the token [CLS] in this example.

In the case you have a sequence of id and would like to know which

tokens belong to these numbers you can call the method

decode.

tokenizer$decode(

int_seqence = list(list(c(0, 53, 109, 174, 188, 61, 67, 97, 97, 112, 73, 97, 110, 99, 101, 17, 1))),

to_token = TRUE

)

#> [[1]]

#> [[1]][[1]]

#> [1] "[CLS] T ##k ##is is a g ##e ##e ##u m ##e ##r ##s ##c . [SEP]"If you would like to analyse the ecological impact of training the tokenizer you can get an estimation of energy consumption and CO2-emission.

# We use t() to improve the readability of the table

t(tokenizer$get_sustainability_data("training"))

#> [,1]

#> sustainability_tracked "TRUE"

#> date "2025-11-18 07:10:06"

#> task "Create tokenizer"

#> sustainability_data.duration_sec "1.784966"

#> sustainability_data.co2eq_kg "1.284276e-05"

#> sustainability_data.cpu_energy_kwh "2.106033e-05"

#> sustainability_data.gpu_energy_kwh "7.697228e-06"

#> sustainability_data.ram_energy_kwh "4.954902e-06"

#> sustainability_data.total_energy_kwh "3.371246e-05"

#> technical.tracker "codecarbon"

#> technical.py_package_version "3.0.7"

#> technical.cpu_count "12"

#> technical.cpu_model "12th Gen Intel(R) Core(TM) i5-12400F"

#> technical.gpu_count "1"

#> technical.gpu_model "1 x NVIDIA GeForce RTX 4070"

#> technical.ram_total_size "15.84258"

#> region.country_name "Germany"

#> region.country_iso_code "DEU"

#> region.region NASince tokenizers can be trained very efficient these values will be very low in most cases.

Finally, you can save and load your model. This is done for all

object with the function pair save_to_disk and

load_from_disk.

save_to_disk(

object = tokenizer,

dir_path = "examples",

folder_name = "example_tokenizer"

)

tokenizer <- load_from_disk("examples/example_tokenizer")Now you are ready to create a base model.

3.3 Creating a BaseModel

At the beginning you can choose between the different supported

transformer architectures. Depending on the architecture, you have

different options determining the shape of your neural network. For this

vignette we use a BERT (Devlin et al. 2019) model which can be created

with the function aife_transformer.make.

base_model <- BaseModelBert$new()

base_model$configure(

tokenizer = tokenizer,

max_position_embeddings = 512,

hidden_size = 64,

num_hidden_layers = 4,

num_attention_heads = 4,

intermediate_size = 2 * 64,

hidden_act = "GELU",

hidden_dropout_prob = 0.1,

attention_probs_dropout_prob = 0.1

)We first create an empty model with new. In the next

step you can configure your model. The available parameter depend on the

type of model. In this example we create a model with 4 hidden layers, a

hidden size of 64, and 4 attention heads. The maximum length of the

sequence of tokens is 512. Since the tokenizer granularity rate is

2.8523643 this allows the model to process a sequence of average

1460.4105327 words.

Please note that with max_position_embeddings you

determine how many tokens your transformer can process. If your text has

more tokens, these tokens are ignored. However, if you would like to

analyze long documents, please avoid to increase this number too

significantly because the computational time does not increase in a

linear way but quadratic (Beltagy, Peters & Cohan 2020). For long

documents you can use another architecture of BERT (e.g. Longformer from

Beltagy, Peters & Cohan 2020) or split a long document into several

chunks which are used sequentially for classification (e.g. Pappagari et

al. 2019). Using chunks is supported by aifedcuation for all

models.

The parameter tokenizer requires an tokenizer object.

Here we use the model created in section 3.2.

The vignette 04 Model configuration and training provides details on how to configure a base model.

Now your model is ready. You can save it with the function

save_to_disk or continue with training.

3.3 Train/Fine-Tune a Base Model

If you would like to train a new base model (see section 3.2) for the

first time or want to adapt a pre-trained model to a domain-specific

language or task, you can call the corresponding

train-method.

base_model$train(

text_dataset = data_set_reviews_text,

p_mask = 0.30,

whole_word = TRUE,

val_size = 0.1,

n_epoch = 10,

batch_size = 12,

max_sequence_length = 250,

full_sequences_only = FALSE,

min_seq_len = 50,

learning_rate = 3e-3,

sustain_track = TRUE,

sustain_iso_code = "DEU",

sustain_region = NULL,

sustain_interval = 15,

trace = TRUE,

pytorch_trace = 0,

log_dir = NULL,

log_write_interval = 2

)

#> 2025-11-18 07:10:07 Start Sustainability Tracking

#> 2025-11-18 07:10:08 Prepare Data for Training

#> 2025-11-18 07:10:09 Calculate Flops Based on Architecture

#> 2025-11-18 07:10:10 Create Data Collator

#> 2025-11-18 07:10:10 Create Trainer

#> 2025-11-18 07:10:10 Using Whole Word Masking

#> 2025-11-18 07:10:10 Start Training

#> 2025-11-18 07:10:35 Stop Sustainability Tracking

#> 2025-11-18 07:10:35 FinishThe data for training must be passed to text_dataset.

This object should be of class LargeDataSetForText as

described in section 2.2.2. In this example we use the text from the

movie reviews. Please note, that this data set is to small for training

a new transformer. We use this here only for a fast running

illustration. For real use cases a larger data set is necessary.

In case of a Bert model, the learning objective is Masked

Language Modeling. Other models may use other learning objectives.

Please refer to the documentation for more details on every model. You

set the way masking is done with p_mask and

whole_word. In the case of whole_word=FALSE a

number of tokens is masked. In the case of whole_word=TRUE

all tokens belonging to a specific number of words are masked. The

number of words/tokens is chosen with p_mask.

You can set the length of token sequences with

max_sequence_length leading the tokenizer to split long

texts into several sequences with the given size. With

val_size, you set how many of the generated sequences

should be used for the validation sample.

Since creating a transformer model is energy consuming,

aifeducation allows you to estimate its ecological impact with

help of the python library codecarbon. Thus,

sustain_track is set to TRUE by default. If

you use the sustainability tracker you must provide the alpha-3 code for

the country where your computer is located (e.g., “CAN”=“Canada”,

“DEU”=“Germany”). A list with the codes can be found on Wikipedia.

The reason is that different countries use different sources and

techniques for generating their energy resulting in a specific impact on

CO2 emissions. For the USA and Canada you can additionally specify a

region by setting sustain_region. Please refer to the

documentation of codecarbon for more information.

If you work on a machine and your graphic device only has small memory capacity, please reduce the batch size significantly.

After training has finished you can save the entire model with

save_to_disk.

save_to_disk(

object = base_model,

dir_path = "examples",

folder_name = "example_base_model"

)To use the model you can load it with

base_model <- load_from_disk("examples/example_base_model")In order to use the model for the fill-mask task you can call

fill_mask.

base_model$fill_mask(

masked_text = "This is a [MASK] movie.",

n_solutions = 5

)

#> [[1]]

#> score token token_str

#> 1 0.06480972 97 ##e

#> 2 0.03591651 106 ##t

#> 3 0.03053751 107 ##o

#> 4 0.03040557 99 ##s

#> 5 0.02923260 102 ##iHere you can provide a text with a mask token indicating the gab the model should close. As a result you get the logits, the token id and the concrete token the model suggests to replace the mask token with.

3.4 Analyzing a BaseModel

If you would like to further investigate a base model you can use some specific methods.

Training History

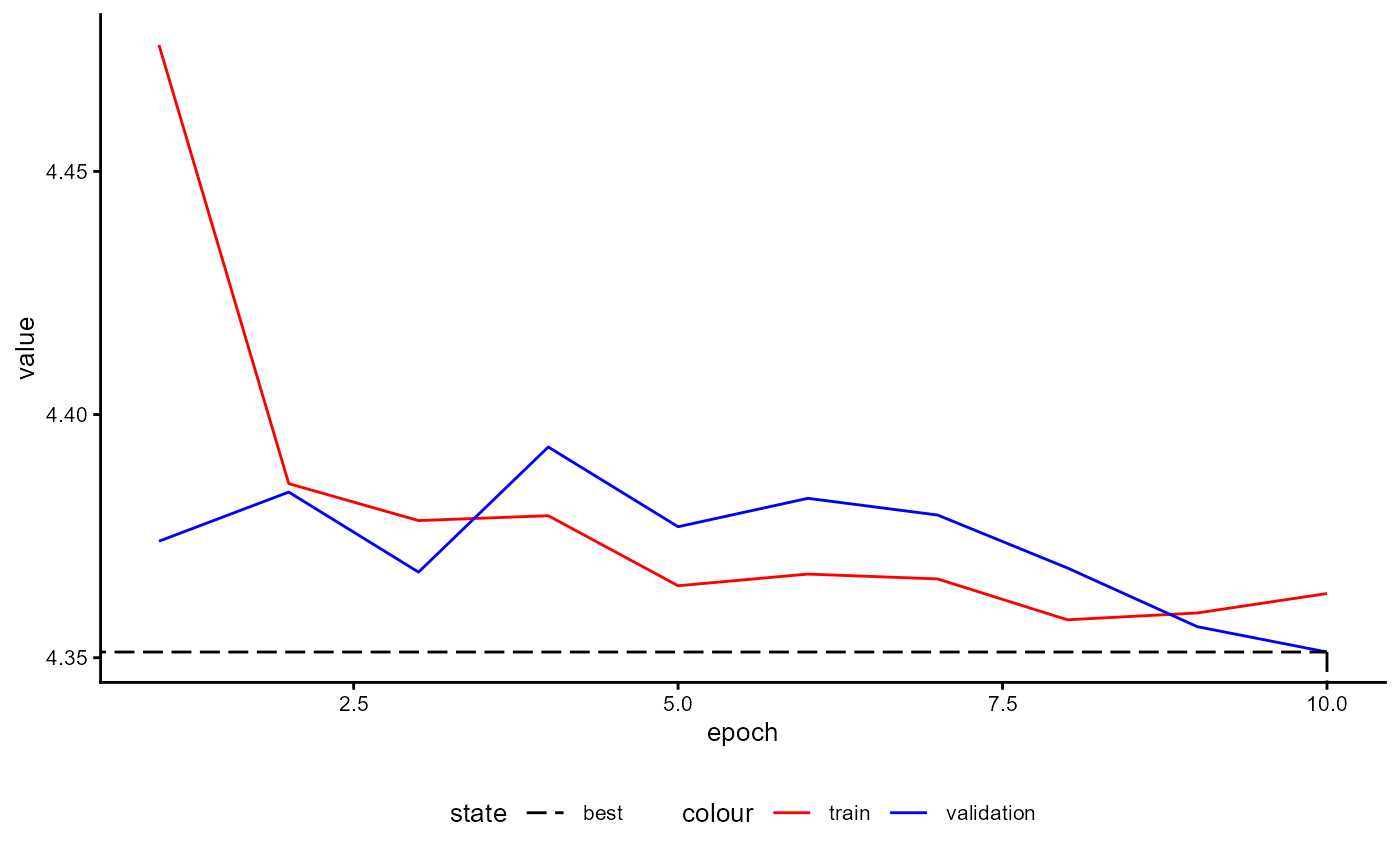

A plot of the training history of the last training can be shown as follows:

base_model$plot_training_history(

ind_best_model = TRUE

)

Figure 4: Example of a Training History for a BaseModel.

Sustainability of Training

To explore the ecological impact of the training and fine-tuning of a

model you can call get_sustainability_data("training").

# We use t() to improve the readability of the table

t(base_model$get_sustainability_data("training"))

#> [,1]

#> sustainability_tracked "TRUE"

#> date "2025-11-18 07:10:35"

#> task "training"

#> sustainability_data.duration_sec "26.45655"

#> sustainability_data.co2eq_kg "0.0002368935"

#> sustainability_data.cpu_energy_kwh "0.0003123003"

#> sustainability_data.gpu_energy_kwh "0.0002360674"

#> sustainability_data.ram_energy_kwh "7.348161e-05"

#> sustainability_data.total_energy_kwh "0.0006218493"

#> technical.tracker "codecarbon"

#> technical.py_package_version "3.0.7"

#> technical.cpu_count "12"

#> technical.cpu_model "12th Gen Intel(R) Core(TM) i5-12400F"

#> technical.gpu_count "1"

#> technical.gpu_model "1 x NVIDIA GeForce RTX 4070"

#> technical.ram_total_size "15.84258"

#> region.country_name "Germany"

#> region.country_iso_code "DEU"

#> region.region NAThe resulting table provides you detailed information on every single training run with active sustainability tracking.

Sustainability of Inference

Not only the ecological impact of the training is important but also

the energy consumption and CO2-emission during inference. You can

estimate the ecological impact by calling the method

estimate_sustainability_inference_fill_mask.

base_model$estimate_sustainability_inference_fill_mask(

text_dataset = data_set_reviews_text,

n = 30,

sustain_iso_code = "DEU",

sustain_region = NULL,

sustain_interval = 15,

trace = TRUE

)

#> 2025-11-18 07:10:36 Prepare Data

#> 2025-11-18 07:10:36 Add Masking Token

#> 2025-11-18 07:10:36 Start Sustainability Tracking

#> 2025-11-18 07:10:38 Stop Sustainability TrackingHere can you provide a text data set which forms the base for

estimating the energy conspumtion during a fill-mask task. With

n you determine the number of texts to be used.

The results of every run can be accessed via

get_sustainability_data("inference").

# We use t() to improve the readability of the table

t(base_model$get_sustainability_data("inference"))

#> [,1]

#> sustainability_tracked "TRUE"

#> date "2025-11-18 07:10:38"

#> task "FillMask"

#> sustainability_data.duration_sec "0.4780285"

#> sustainability_data.co2eq_kg "3.458752e-06"

#> sustainability_data.cpu_energy_kwh "5.630884e-06"

#> sustainability_data.gpu_energy_kwh "2.123891e-06"

#> sustainability_data.ram_energy_kwh "1.324506e-06"

#> sustainability_data.total_energy_kwh "9.079281e-06"

#> technical.tracker "codecarbon"

#> technical.py_package_version "3.0.7"

#> technical.cpu_count "12"

#> technical.cpu_model "12th Gen Intel(R) Core(TM) i5-12400F"

#> technical.gpu_count "1"

#> technical.gpu_model "1 x NVIDIA GeForce RTX 4070"

#> technical.ram_total_size "15.84258"

#> region.country_name "Germany"

#> region.country_iso_code "DEU"

#> region.region NA

#> data "empirical data"

#> n "30"

#> batch "1"

#> min_seq_len NA

#> mean_seq_len NA

#> sd_seq_len NA

#> max_seq_len NAFLOPS Estimates

Another important value is the total number of FLOPS during training.

These are estimated during every training run. With

get_flops_estimates you receive a table.

# We use t() to improve the readability of the table

t(base_model$get_flops_estimates())

#> [,1]

#> date "2025-11-18 07:10:10"

#> approach "architecture-based"

#> package "calflops"

#> version "0.3.2"

#> n_parameter "184200"

#> batch_size "12"

#> n_batches "85"

#> n_epochs "10"

#> flops_bp_1 "3.124416e+12"

#> flops_bp_2 "4.686624e+12"

#> flops_bp_3 "6.248831e+12"

#> flops_bp_4 "7.811039e+12"

#> flops_counted NAAt the moment only an architecture-based approach is available. The number of FLOPS is not counted directly. Instead it is estimated approximately. The column ‘flops_bp_1’ describes the number of FLOPS for all forwand and backward passes.

Kaplan et al. (2020, p. 7) propose that as a rule of thumb the backward pass has the FLOPS of the forward pass multiplied with factor 2. The resulting FLOPS for all forward and backward passes of a training run are shown in column ‘flops_bp_2’.

All columns do not include computations for a validation or test sample.

3.5 Creating a BaseModel from Hugging Face

In the case you would like to use a pre-trained model from hugging face you can create a base model as follows:

- Chose a model on Hugging Face. Please ensure that the model’s architecture is supported by aifeducation.

- Download the relevant files (model and tokenizer) into a folder on your machine.

- Create an empty base model for the corresponding architecture.

- Call the method

create_from_hfand provide the path the the folder where you stored the model and the tokenizer.

base_model_bert <- BaseModelBert$new()

base_model_bert$create_from_hf(

model_dir = "examples/bert_uncased",

tokenizer_dir = "examples/bert_uncased"

)Now you can use the model as all other base models in aifedcuation. For example, you can train the model as described above or you can use the model as foundation for a TextEmbeddingModel.

4 Text Embedding Models

4.1 Introduction

The text embedding model is used to transform raw texts into

numerical representations. In order to create a new model, you need a

base model that provides the ability to understand natural language. A

text embedding model is stored as an object of class

TextEmbeddingModel.

In aifedcuation, the transformation of raw texts into numbers is a separate step from downstream tasks such as classification. This is to reduce computational time on machines with low performance. By separating text embedding from other tasks, the text embedding has to be calculated only once and can be used for different tasks at the same time. Another advantage is that the training of the downstream tasks involves only the downstream tasks an not the parameters of the embedding model, making training less time-consuming, thus decreasing computational intensity. Finally, this approach allows the analysis of long documents by applying the same algorithm to different parts of a text.

The text embedding model provides a unified interface: After creating the model with different methods, the handling of the model is always the same.

4.2 Create a Text Embedding Model

First you have to choose the base model that forms the foundation of

your new text embedding model. In order to illustrate its use we apply a

pre-trained model from Hugging

Face called BERT base

model (uncased) published by Devlin et al. (2019). Download all

files into a new folder. Here we store the model in

"examples/bert_uncased".

We first load the base model as described in section 3.

base_model_bert <- BaseModelBert$new()

base_model_bert$create_from_hf(

model_dir = "examples/bert_uncased",

tokenizer_dir = "examples/bert_uncased"

)Now we can create the TextEmbeddingModel. Therefor we have to passe

the base model to the parameter base_model.

bert_modeling <- TextEmbeddingModel$new()

bert_modeling$configure(

model_label = "Text Embedding via BERT",

model_language = "english",

max_length = 512,

chunks = 4,

overlap = 30,

emb_layer_min = 6,

emb_layer_max = 8,

emb_pool_type = "Average",

base_model = base_model_bert

)Using a BERT model for text embedding is not a problem since your

text does not provide more tokens than the transformer can process. This

maximum value is set in the configuration of the transformer (see

section 3.3). If the text produces more tokens, the last tokens are

ignored. In some instances you might want to analyze long texts. In

these situations, reducing the text to the first tokens (e.g. only the

first 512 tokens) could result in a problematic loss of information. To

deal with these situations, you can configure a text embedding model in

aifecuation to split long texts into several chunks which are

processed by the base model. The maximum number of chunks is set with

chunks. In our example above, the text embedding model

would split a text consisting of 1024 tokens into two chunks with every

chunk consisting of 512 tokens. For every chunk, a text embedding is

calculated. As a result, you receive a sequence of embeddings. The first

embedding characterizes the first part of the text and the second

embedding characterizes the second part of the text (and so on). Thus,

our sample text embedding model is able to process texts with about

tokens. This approach is inspired by the work by Pappagari et

al. (2019).

Since transformers are able to account for the context, it may be

useful to interconnect every chunk to bring context into the

calculations. This can be done with overlap to determine

how many tokens of the end of a prior chunk should be added to the next.

In our example the last 30 tokens of the prior chunks are added at the

beginning of the following chunk. This can help to add the correct

context of the text sections into the analysis. Altogether, this model

can analyse a maximum of

tokens of a text.

Finally, you have to decide from which hidden layer(s) the embeddings

should be drawn. With emb_layer_min and

emb_layer_max you can decide from which layers the average

value for every token should be calculated. Please note that the

calculation considers all layers between emb_layer_min and

emb_layer_max. In their initial work, Devlin et al. (2019)

used the hidden states of different layers for classification.

With emb_pool_type, you decide which tokens are used for

pooling within every layer. In the case of

emb_pool_type="CLS", only the cls token is used. In the

case of emb_pool_type="Average" all tokens within a layer

are averaged except padding tokens.

The vignette 04 Model configuration and training provides details on how to configure a text embedding model.

After deciding about the configuration, you can use your model.

You can see the number of learnable parameters of the underlying base model with

bert_modeling$BaseModel$count_parameter()

#> [1] 109514298Another important value is the number of features which you can

request by calling get_n_features.

bert_modeling$get_n_features()

#> [1] 768This number describes the number of dimensions for a text embedding. That is, the number of dimensions which is used to characterize the content of every chunk of text. This value is important as it determines the complexity a classifier or feature extractor has to deal with. Some of classifier’s and feature extractor’s parameters depend on this value. We elaborate this at the relevant point for the different models.

4.3 Transforming Raw Texts into Embedded Texts

To transform raw text into a numeric representation, you only have to

use the embed_large method of your model. To do this, you

must provide a LargeDataSetForText to

text_dataset. Relying on the sample data from section 2.3,

we can use the movie reviews as raw texts.

review_embeddings <- bert_modeling$embed_large(

text_dataset = data_set_reviews_text,

trace = TRUE

)

#> 2025-11-18 07:10:39 Batch 1 / 10 done

#> 2025-11-18 07:10:40 Batch 2 / 10 done

#> 2025-11-18 07:10:41 Batch 3 / 10 done

#> 2025-11-18 07:10:41 Batch 4 / 10 done

#> 2025-11-18 07:10:42 Batch 5 / 10 done

#> 2025-11-18 07:10:43 Batch 6 / 10 done

#> 2025-11-18 07:10:43 Batch 7 / 10 done

#> 2025-11-18 07:10:44 Batch 8 / 10 done

#> 2025-11-18 07:10:45 Batch 9 / 10 done

#> 2025-11-18 07:10:45 Batch 10 / 10 doneThe method embed_largecreates an object of class

LargeDataSetForTextEmbeddings. This is just a data set

consisting of the embeddings of every text. The embeddings are an array,

of which the first dimension refers to specific texts, the second

dimension refers to chunks/sequences, and the third dimension refers to

the features.

With the embedded texts you now have the input to train a new classifier or to apply a pre-trained classifier for predicting categories/classes. In the next chapter we will show you how to use these classifiers. But before we start, we will show you how to save and load your model.

4.4 Saving and Loading Embedded Texts

Since transforming raw texts into text embeddings is time and energy consuming we recommend to save them to disk in order to use the embeddings for further tasks and analysis.

To save the them just call the function save_to_disk as

shown below.

save_to_disk(

object = review_embeddings,

dir_path = "examples",

folder_name = "imdb_movie_reviews"

)To load the embeddings you can call the function

load_from_disk.

review_embeddings <- load_from_disk("examples/imdb_movie_reviews")4.5 Saving and Loading Text Embedding Models

Saving a created text embedding model is very easy in

aifeducation by using the function save_to_disk.

This function provides a unique interface for all text embedding models.

For saving your work you can pass your model to object and

the directory where to save the model to dir_path. With

folder_name you can determine the name of the folder that

should be created in that directory to store the model.

save_to_disk(

object = bert_modeling,

dir_path = "examples",

folder_name = "bert_te_model"

)In this example the model is saved in a folder at the location

examples/bert_te_model. If you want to load your model you

can call load_from_disk.

bert_modeling <- load_from_disk("examples/bert_te_model")4.6 Sustainability

In case the underlying model was trained with an active

sustainability tracker (section 3.2 and 3.3) you can receive a table

showing you the energy consumption, CO2 emissions, and hardware used

during training by calling

BaseModel$get_sustainability_data("training"). For our

example this would be

bert_modeling$BaseModel$get_sustainability_data("training").

4.7 Training History

If you would like to see the training history of the underlying base model you can call a special method.

bert_modeling$BaseModel$plot_training_history(

ind_best_model = TRUE

)Please note that this plot is not available for this example since the necessary data is not directly available for this model on Hugging Face. If you train a model with this package the training history is always saved.

5 Overview Classifiers

Classifiers are built on top of a TextEmbeddingModel.

They use the embedded texts produced by these models and predict

classes/categories. You can build your classifier with the help of two

components.

First, you choose a core model. It determines where different layers are located and how the outputs of the different layers are combined into the final output of the model.

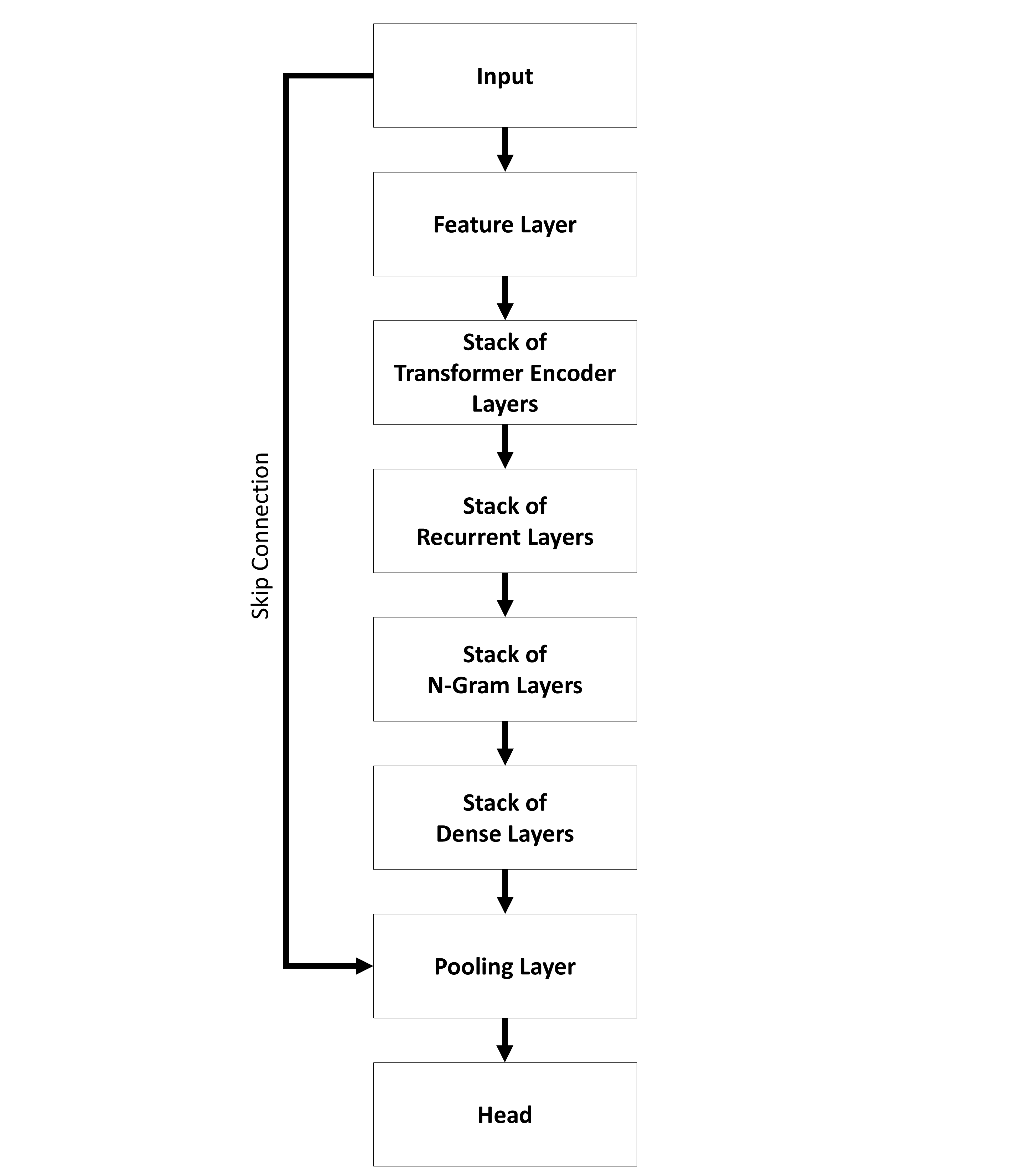

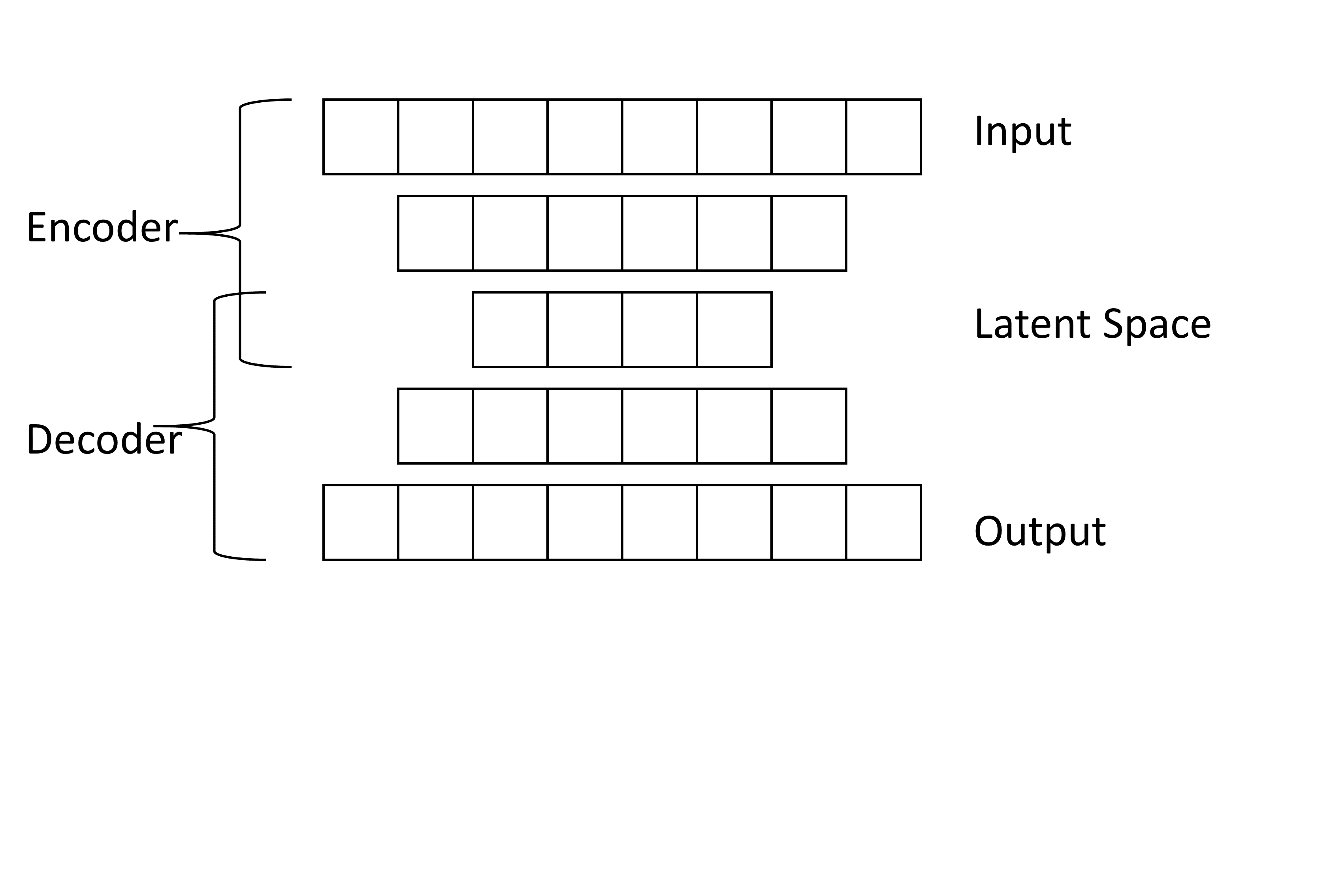

The sequential architecture (Figure 5) provides models where the input is passed to a specific number of layers step by step. All layers are grouped by their kind into stacks.

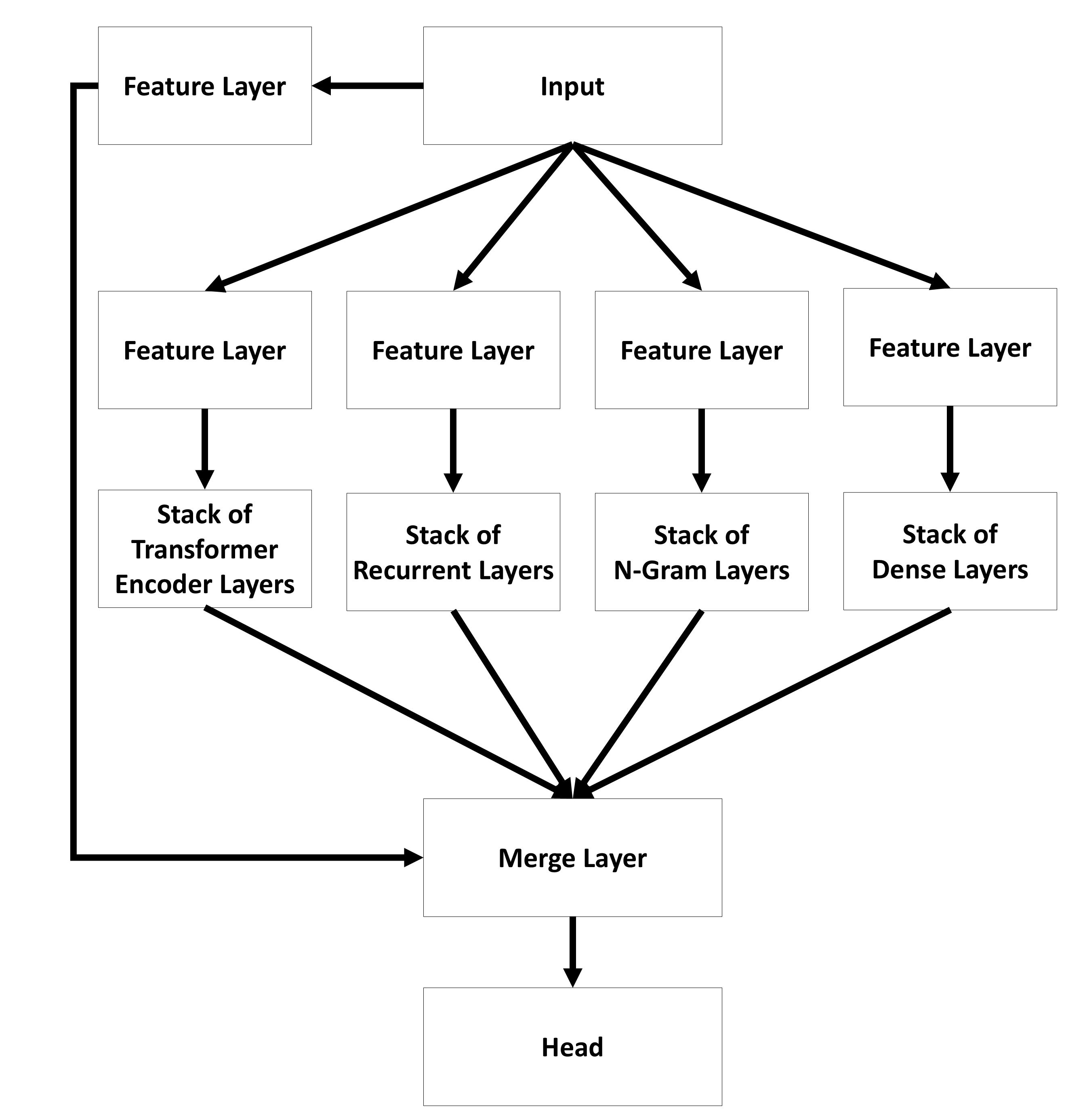

In contrast, the parallel architecture (Figure 6) offers a model where an input is passed to different types of layers separately. At the end the outputs are combined to create the final output of the whole model.

You can find the name of the used core model in the name of the

classifiers. For example TEClassifierSequential uses a

sequential core model while TEClassifierParallel

uses a parallel core model.

In general, all layers within a core model allow further customization allowing you to build a high number of different models.

A detailed description of all layers can be found in vignette A01 Layers and Stack of Layers:

Second, you can choose how the core model is used for classification. At the moment a probability and metric based classifier is possible.

- Probability Based Classifiers: Probability classifiers are used to predict a probability distribution for different classes/categories. This is the standard case most common in literature.

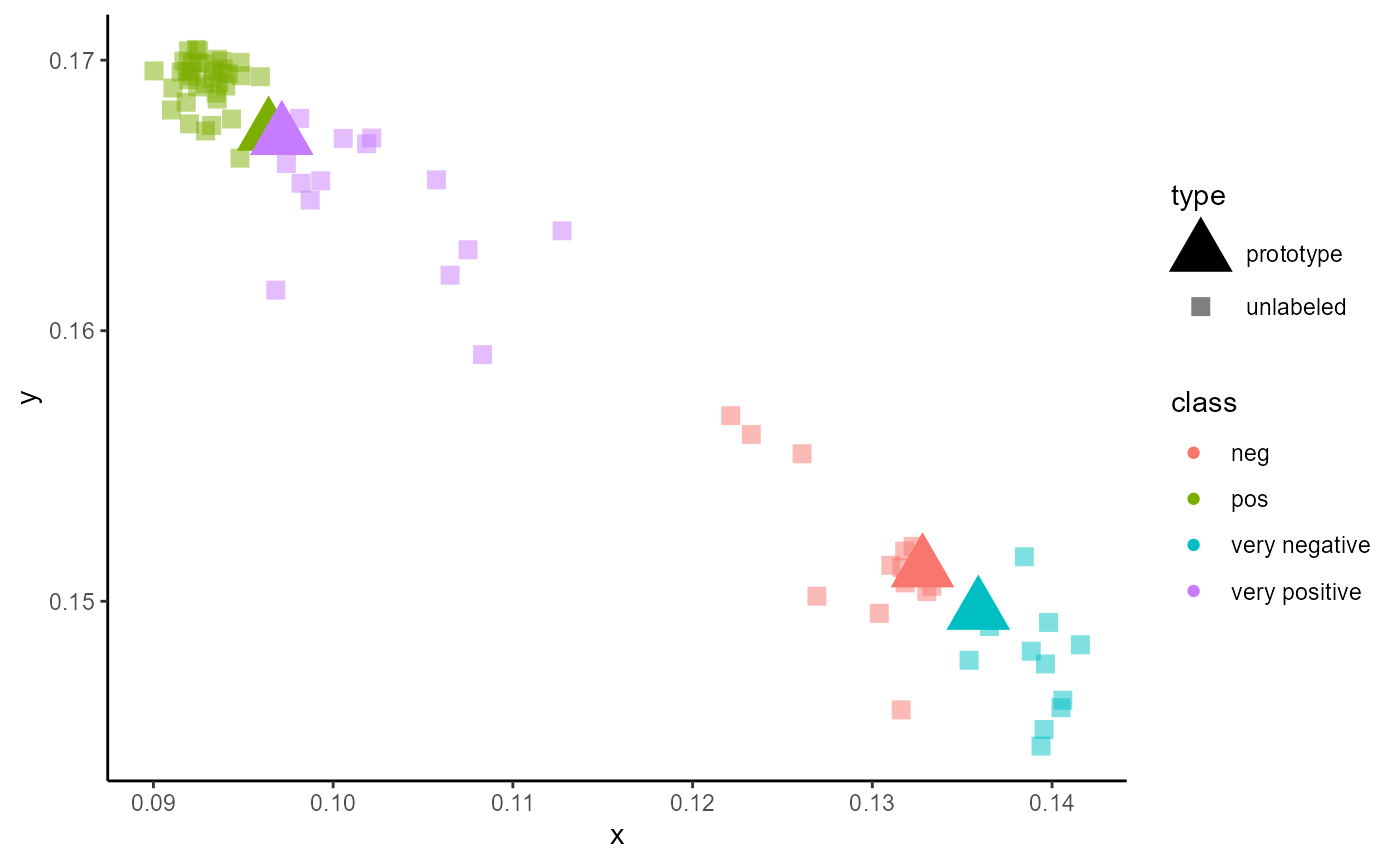

- Prototype Based Classifiers: Prototype based classifiers are a kind of metric based classifiers. Here the classifiers do not predict a probability distribution. Instead it calculates a prototype for every class/category and measures the distance between a case and all prototypes. The class/category of the prototype with the smallest distance to the case is assigned to that case. In contrast to the probability classifiers these models can handle classes/categories that were not part of the training. For more details please refer to section 7.

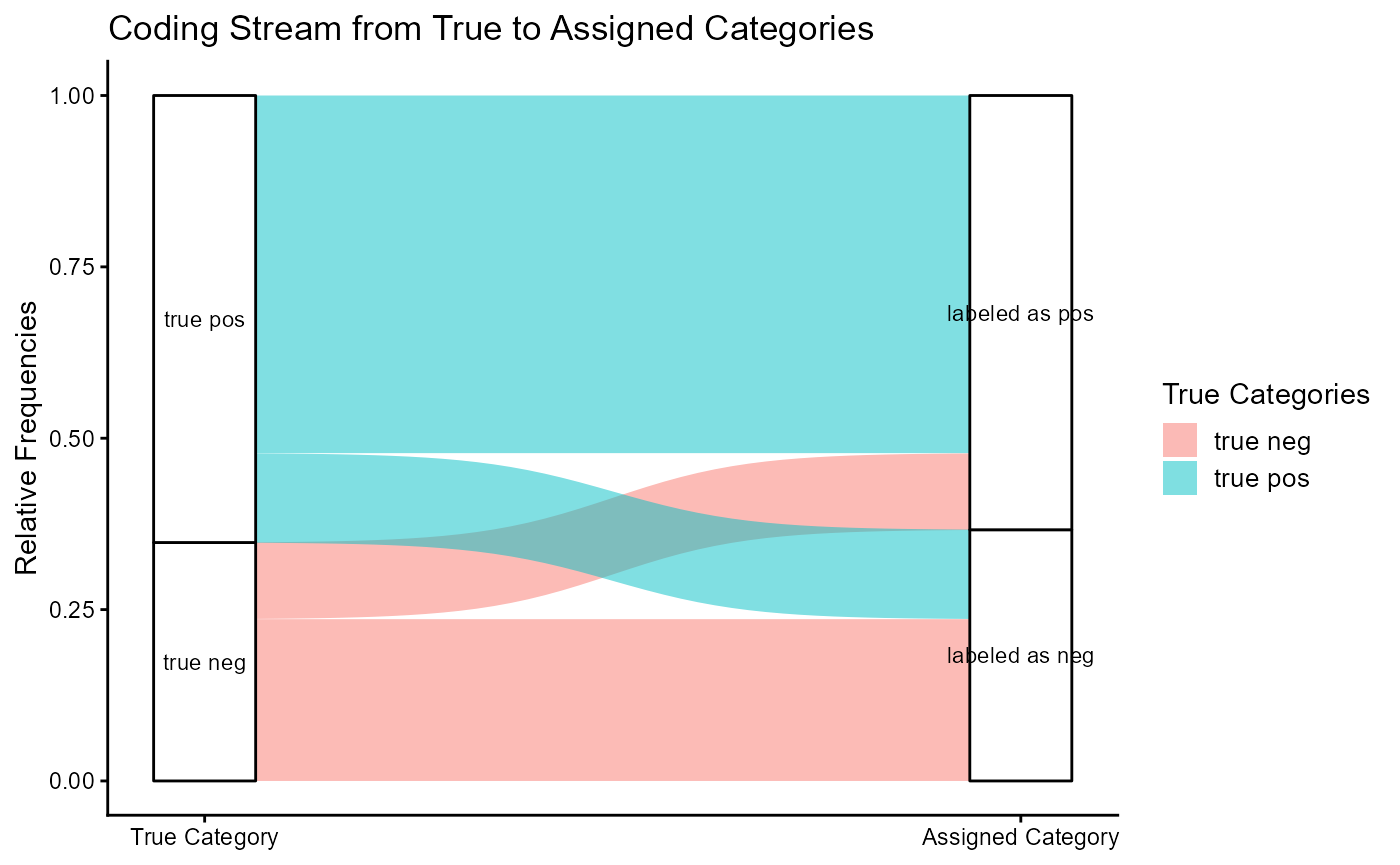

The training of a classifier consists of two stages. The first stage aims to estimate the performance of the model while the second stage trains the model for application.

For performance estimation, training splits the data into several

chunks based on cross-fold validation. The number of folds is set with

data_folds. In every case, one fold is not used for

training and serves as a test sample. The remaining data is

used to create a training and a validation sample. The

percentage of cases within each fold used as a validation sample is

determined with data_val_size. This sample is used to

determine the state of the model that generalizes best. All performance

values saved in the trained classifier refer to the test sample. This

data has never been used during training and provides a more realistic

estimation of a classifier’s performance.

Since aifedcuation tries to address the special needs in educational and social science, some special training steps are integrated for all models.

Synthetic Cases: In case of imbalanced data, it is recommended to augment your data. Before training, a number of new units is created via different techniques. Currently you can request the K-Nearest Neighbor OveRsampling Approach (KNNOR) developed by Islam et al. (2022). The aim is to create new cases that fill the gap to the majority class. Multi-class problems are reduced to a two class problem (class under investigation vs. all others) for generating these units. If the technique allows to set the number of neighbors during generation, you can configure the data generation with

sc_min_kandsc_max_k. The synthetic cases for every class are generated for all k betweensc_min_kandsc_max_k. Every k contributes proportionally to the synthetic cases.Pseudo-Labeling: This technique is relevant if you have labeled target data and a large number of unlabeled target data. The idea is to integrate the unlabeled into training to increase performance and to avoid high costs by labeling a high number of cases manually. With the different parameters starting with

pl_, you can configure the process of pseudo-labeling. Implementation of pseudo-labeling is based on Cascante-Bonilla et al. (2020). To apply pseudo-labeling, you have to setuse_pl=TRUE.pl_max=1.00,pl_anchor=1.00, andpl_min=0.00are used to describe the certainty of a prediction. 0 refers to random guessing while 1 refers to perfect certainty.pl_anchoris used as a reference value. The distance topl_anchoris calculated for every case. Then, they are sorted with an increasing distance frompl_anchor. The proportion of added pseudo-labeled data into training increases with every step. The maximum number of steps is determined withpl_max_steps. Cases close topl_anchorare included first.

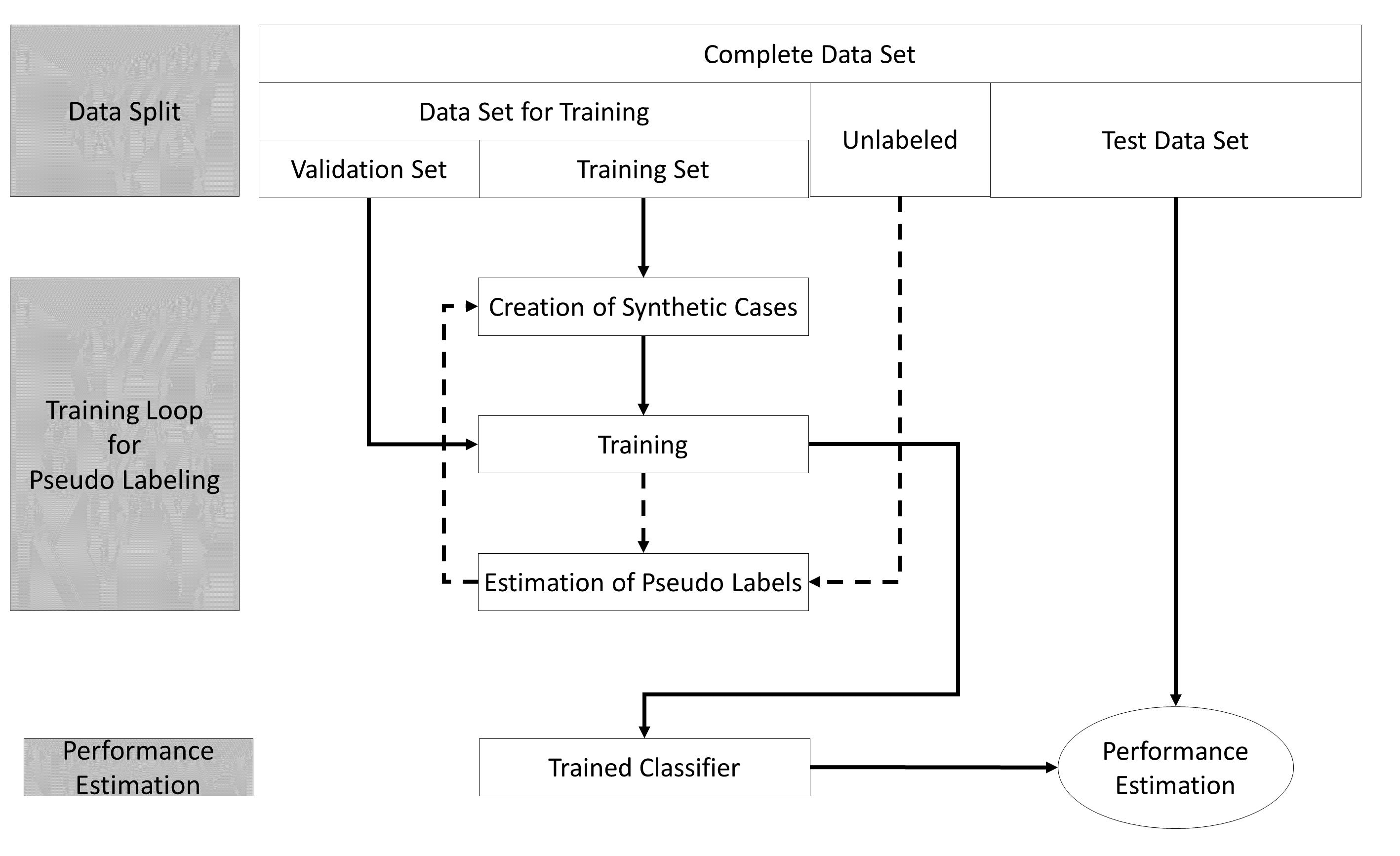

Figure 7 illustrates the training loop for the cases that all options

are set to TRUE.

The example stated in the Figure applies the generation of synthetic

cases and the algorithm proposed by Cascante-Bonilla et al. (2020). For

every fold, the training starts with generating synthetic cases to fill

the gap between the classes and the majority class. After this, an

initial training of the classifiers starts. The trained classifier is

used to predict pseudo-labels for the unlabeled part of the data and

adds 20% of the cases with the highest certainty for their pseudo-labels

to the training data set. Now new synthetic cases are generated based on

both the labeled data and the newly added pseudo-labeled data. The

classifier is re-initialized and trained again. After training, the

classifier predicts the potential labels of all originally

unlabeled data and adds 40% of the pseudo-labeled data to the training

data with the highest certainty. Again, new synthetic cases are

generated on both the labeled and added pseudo-labeled data. The model

is again re-initialized and trained again until the maximum number of

steps for pseudo labeling (pl_max_steps) is reached. After

this, the logarithm is restated for the next fold until the number of

folds (data_folds) is reached. All of these steps are only

used to estimate the performance of the classifier to evaluate for

unknown data.

The last phase of the training begins after the last fold. In the final training, the data set is split only into a training and validation set without a test set to provide the maximum amount of data for the best performance in final training. All configurations of the performance estimation phase are used in the final training phase.

Please note that creating, training, and predicting works for all types of classifiers as described in the chapters below.

6 Probability Based Classifiers

6.1 Creation

To show you how to create a classifier we use a classifier of class

TEClassifierSequential as an example. With the sample data

from section 2.3 and the text embeddings from section 4.3, the creation

of a new classifier may look like:

classifier <- TEClassifierSequential$new()

classifier$configure(

label = "Classifier for Estimating a Postive or Negative Rating of Movie Reviews",

text_embeddings = review_embeddings,

feature_extractor = NULL,

target_levels = c("neg", "pos"),

skip_connection_type = "ResidualGate",

cls_pooling_features = 50,

cls_pooling_type = "MinMax",

cls_head_type = "PairwiseOrthogonalDense",

feat_act_fct = "ELU",

feat_size = 384,

feat_bias = TRUE,

feat_dropout = 0.0,

feat_parametrizations = "None",

feat_normalization_type = "LayerNorm",

ng_conv_act_fct = "ELU",

ng_conv_n_layers = 1,

ng_conv_ks_min = 2,

ng_conv_ks_max = 4,

ng_conv_bias = FALSE,

ng_conv_dropout = 0.1,

ng_conv_parametrizations = "None",

ng_conv_normalization_type = "LayerNorm",

ng_conv_residual_type = "ResidualGate",

dense_act_fct = "ELU",

dense_n_layers = 0,

dense_dropout = 0.00,

dense_bias = FALSE,

dense_parametrizations = "None",

dense_normalization_type = "LayerNorm",

dense_residual_type = "ResidualGate",

rec_act_fct = "Tanh",

rec_n_layers = 0,

rec_type = "GRU",

rec_bidirectional = FALSE,

rec_dropout = 0.2,

rec_bias = FALSE,

rec_parametrizations = "None",

rec_normalization_type = "LayerNorm",

rec_residual_type = "ResidualGate",

tf_act_fct = "ELU",

tf_dense_dim = 400,

tf_n_layers = 0,

tf_dropout_rate_1 = 0.1,

tf_dropout_rate_2 = 0.3,

tf_attention_type = "MultiHead",

tf_positional_type = "absolute",

tf_num_heads = 1,

tf_bias = FALSE,

tf_parametrizations = "None",

tf_normalization_type = "LayerNorm",

tf_normalization_position = "Pre",

tf_residual_type = "ResidualGate"

)Similarly to the text embedding model, you should provide a label

(label) for your new classifier. With

text_embeddings you have to provide a

LargeDataSetForTextEmbeddings. The data set is created with

a TextEmbeddingModel as described in section 4. We here

continue our example and use the embeddings produced by our BERT

model.

target_levels take the categories/classes you classifier

should predict. This can be numbers or even words.

In case you would like to use ordinal data, it is very important that you provide the classes/categories in the correct order. That is, classes/categories representing a “higher” level must be stated after categories/classes with a “lower” level. If you provide the wrong order, the performance indices are not valid. In case of nominal data the order does not matter.

With feature_extractor you can add a feature extractor

that tries to reduce the number of features of your text embeddings

before passing the embeddings to the classifier. You can read more on

this in section 8.

With the help of the other parameters you can define the complexity and abilities of your model. A description of the different models can be found in A01 Layers and Stack of Layers.

Please note that you have to choose the parameter

feat_size depending on the number of features of the

underlying text embedding model. You can request this number by calling

the method get_n_features of the used text embedding model.

In our example this would be:

bert_modeling$get_n_features()

#> [1] 768The number for feat_size should be equal to or less than

this value, since this layer tries to compress the text embeddings into

fewer dimensions. While this reduces the number of of parameters for all

subsequent layers and decreases training and usage time, it may result

in information loss. You can experiment with this value to strike a

balance between speed and performance. A study by Takeshita et

al. (2025) shows that randomly dropping 50% of the dimensions leads only

to a minor drop in performance in classification tasks. Thus, this value

can serve as a rule of thumb for determining this parameter.

In addition, the parameter cls_pooling_features should

be equal or less the number you used for feat_size. With

cls_pooling_features you determine how many of the

resulting features should be used for classification. Thus, this value

acts as a filter.

The vignette 04 Model configuration and training provides details on how to configure a classifier.

In our example we use only two n-gram layers

(ng_conv_n_layers = 1) and one dense layers

(dense_n_layers = 0). All other layers are omitted from the

model by setting the number of layers to zero

(tf_n_layers = 0,rec_n_layers = 0).

As pooling method we use minimum and maximum

(cls_pooling_type="MinMax"). That is, the 25 highest and

the 25 lowest features are used for calculating the classes/labels. The

number of values can be determined with

cls_pooling_features=50.

In this example we use a special classification head

(cls_head_type = "PairwiseOrthogonal") proposed by (Li et

al. (2020))[https://doi.org/10.1109/TIP.2020.2990277]. This layer

allows every neuron only to establish a connection to a single class. In

contrast to regular dense layers

(cls_head_type = "Regular") where all neurons of the

previous layer are connected all classes. We add a dense layer before

the classification head

(cls_head_type = "PairwiseOrthogonalDense") in order to

improve performance with high rates for dropout in the previous

layers.

After you have created a new classifier, you can begin training. You can see the number of learnable parameters of your model with:

classifier$count_parameter()

#> [1] 7409856.2 Training

To start the training of your classifier, you have to call the

train method. Similarly, for the creation of the

classifier, you must provide the text embeddings to

data_embeddings and the categories/classes as target data

to data_targets. Please remember that

data_targets expects a named factor where

the names correspond to the IDs of the corresponding text embeddings.

Text embeddings and target data that cannot be matched are omitted from

training.

classifier$train(

data_embeddings = review_embeddings,

data_targets = review_labels,

data_folds = 10,

data_val_size = 0.25,

loss_balance_class_weights = TRUE,

loss_balance_sequence_length = TRUE,

loss_cls_fct_name = "FocalLoss",

use_sc = FALSE,

sc_method = "knnor",

sc_min_k = 1,

sc_max_k = 10,

use_pl = FALSE,

pl_max_steps = 3,

pl_max = 1.00,

pl_anchor = 1.00,

pl_min = 0.00,

sustain_track = TRUE,

sustain_iso_code = "DEU",

sustain_region = NULL,

sustain_interval = 15,

epochs = 800,

batch_size = 32,

trace = TRUE,

ml_trace = 0,

log_dir = NULL,

log_write_interval = 10,

n_cores = auto_n_cores(),

lr_rate = 3e-2,

lr_warm_up_ratio = 0.05,

optimizer = "AdamW"

)

#> 2025-11-18 07:10:49 Total Cases: 300 Unique Cases: 300 Labeled Cases: 225

#> 2025-11-18 07:10:49 Start

#> 2025-11-18 07:10:49 Start Sustainability Tracking

#> 2025-11-18 07:10:51 | Iteration 1 from 10

#> 2025-11-18 07:10:51 | Iteration 1 from 10 | Training

#> 2025-11-18 07:12:10 | Iteration 2 from 10

#> 2025-11-18 07:12:10 | Iteration 2 from 10 | Training

#> 2025-11-18 07:13:30 | Iteration 3 from 10

#> 2025-11-18 07:13:30 | Iteration 3 from 10 | Training

#> 2025-11-18 07:14:50 | Iteration 4 from 10

#> 2025-11-18 07:14:50 | Iteration 4 from 10 | Training

#> 2025-11-18 07:16:08 | Iteration 5 from 10

#> 2025-11-18 07:16:08 | Iteration 5 from 10 | Training

#> 2025-11-18 07:17:29 | Iteration 6 from 10

#> 2025-11-18 07:17:29 | Iteration 6 from 10 | Training

#> 2025-11-18 07:18:50 | Iteration 7 from 10

#> 2025-11-18 07:18:50 | Iteration 7 from 10 | Training

#> 2025-11-18 07:20:10 | Iteration 8 from 10

#> 2025-11-18 07:20:10 | Iteration 8 from 10 | Training

#> 2025-11-18 07:21:29 | Iteration 9 from 10

#> 2025-11-18 07:21:29 | Iteration 9 from 10 | Training

#> 2025-11-18 07:22:47 | Iteration 10 from 10

#> 2025-11-18 07:22:47 | Iteration 10 from 10 | Training

#> 2025-11-18 07:24:06 | Final training

#> 2025-11-18 07:24:06 | Final training | Training

#> 2025-11-18 07:25:29 Stop Sustainability Tracking

#> 2025-11-18 07:25:29 Training CompleteYou can further modify the training process with different arguments.

With loss_balance_class_weights=TRUE the absolute

frequencies of the classes/categories are adjusted according to the

‘Inverse Class Frequency’ method. This option should be activated if you

have to deal with imbalanced data.

With loss_balance_sequence_length=TRUE you can increase

performance if you have to deal with texts that differ in their lengths

and have an imbalanced frequency. If this option is enabled, the loss is

adjusted to the absolute frequencies of length of your texts according

to the ‘Inverse Class Frequency’ method.

epochs determines the maximal number of epochs. During

training, the model with the best balanced accuracy is saved and

used.

batch_size sets the number of cases to be processed

simultaneously. It is a very important parameter with a significant

impact on the model’s performance due to the generalisation gap (Keskar

et al., 2017 p. 2). The generalisation gap describes the observation

that large batch sizes tend to poor generalisation performance on test

datasets compared to models trained with a small batch size (Keskar et

al., 2017 p. 3). A study conducted by Masters and Luschi (2018) suggests

ways to achieve good batch size for using "SGD" as

optimizer. Their study shows that smaller batch sizes offer more stable

and reliable training and at the same time achieve better generalisation

performance. Batch sizes between 4 and 32 lead to the best

performance.

Please note that there are exceptions to this rule. In the context of contrastive learning, higher batch sizes tend to generate better generalisation performance (Chen et al., 2020). For more details please refer to the vignette 04 Model configuration and training

Since training a neural net is energy consuming,

aifeducation allows you to estimate its ecological impact with

the help of the python library codecarbon. Thus,

sustain_track is set to TRUE by default. If

you use the sustainability tracker you must provide the alpha-3 code for

the country where your computer is located (e.g., “CAN”=“Canada”,

“DEU”=“Germany”). A list with the codes can be found on Wikipedia.

The reason is that different countries use different sources and

techniques for generating their energy resulting in a specific impact on

CO2 emissions. For the USA and Canada, you can additionally specify a

region by setting sustain_region. Please refer to the

documentation of ‘codecarbon’ for more information.

Finally, trace and ml_trace allow you to

control how much information about the training progress is printed to

the console.

Please note that training the classifier can take some time. In case

options like the generation of synthetic cases (use_sc) or

pseudo-labeling (use_pl) are disabled, the training process

is shorter.

Please note that after performance estimation, the final training of the classifier makes use of all data available. That is, the test sample is left empty.

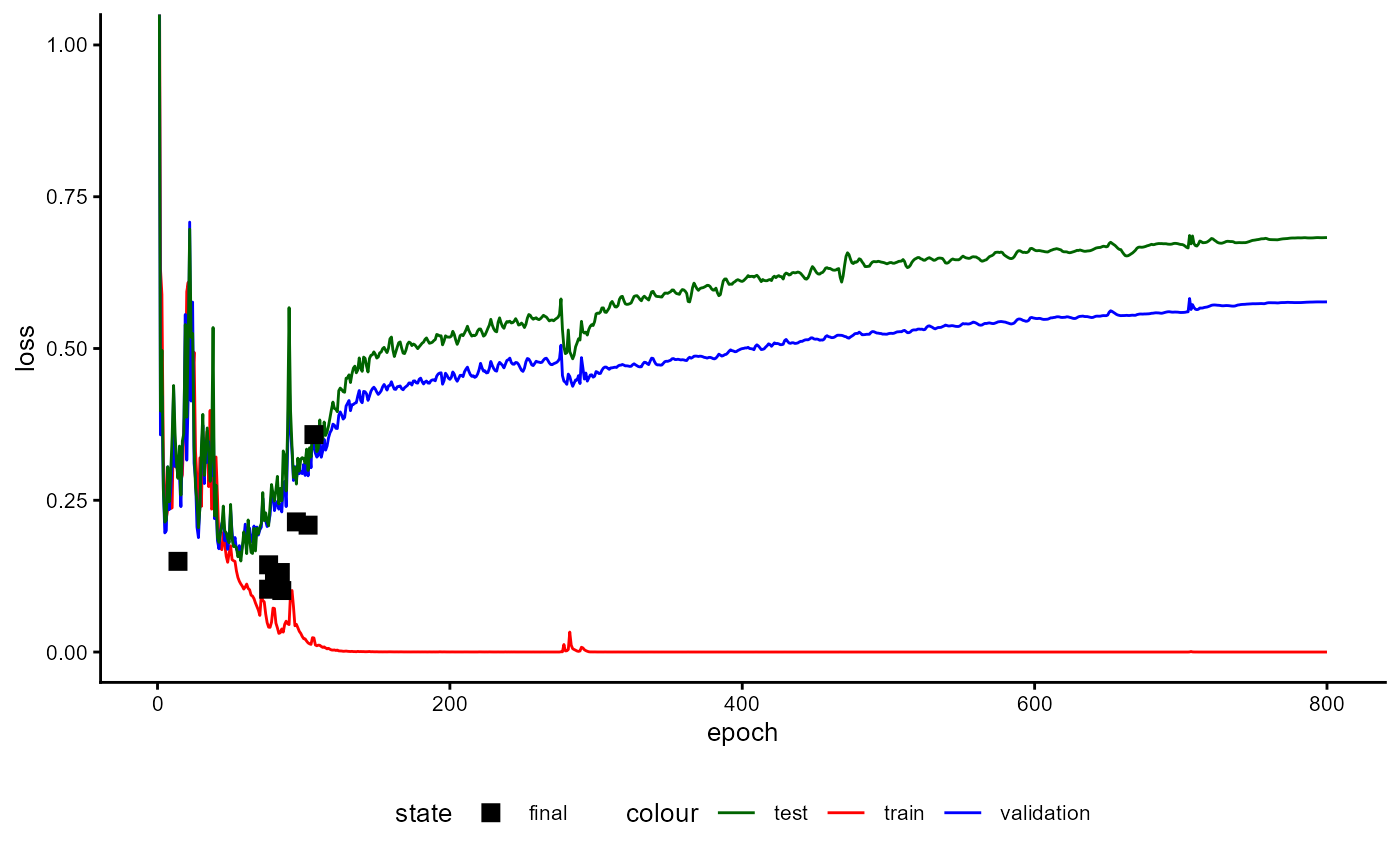

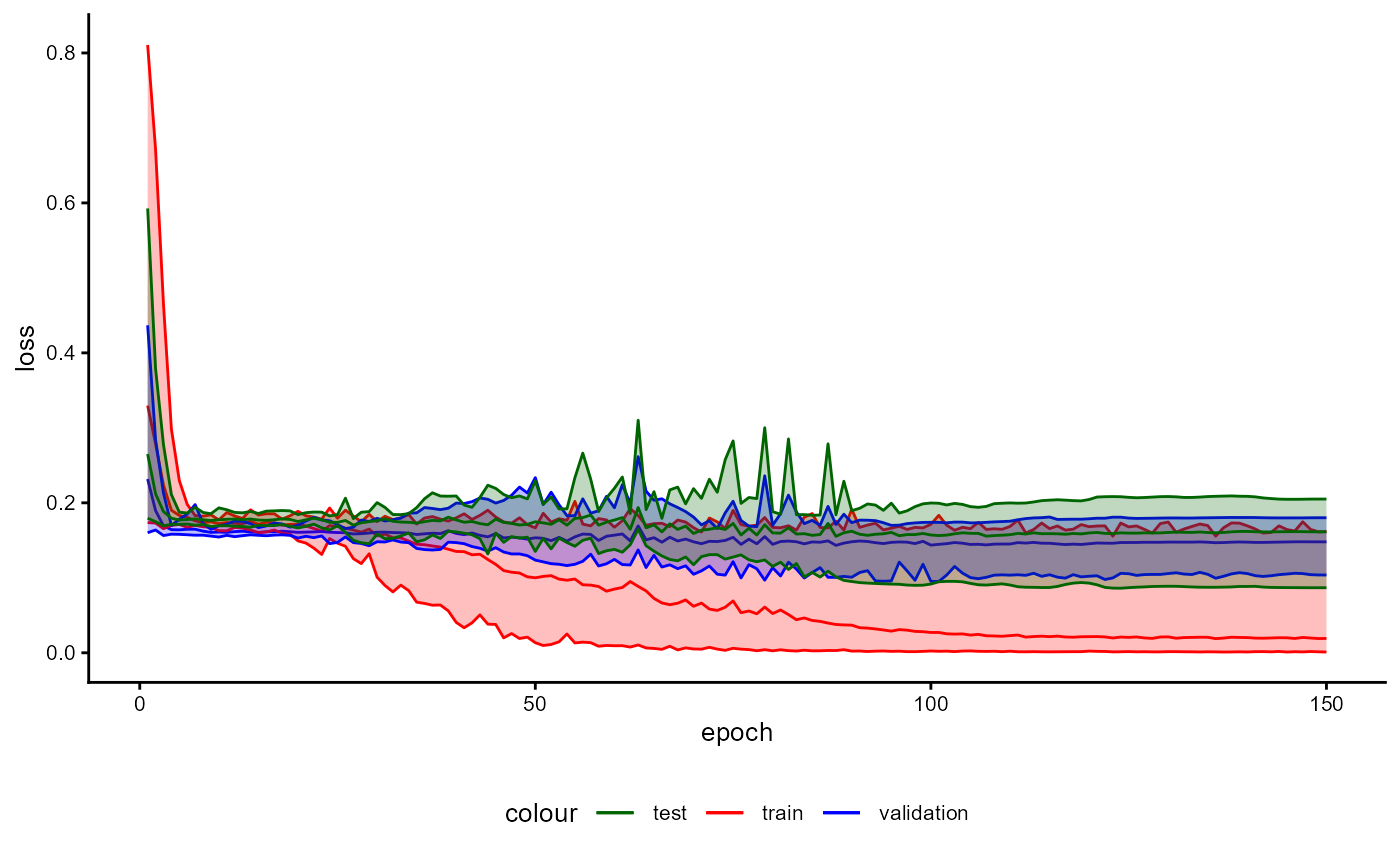

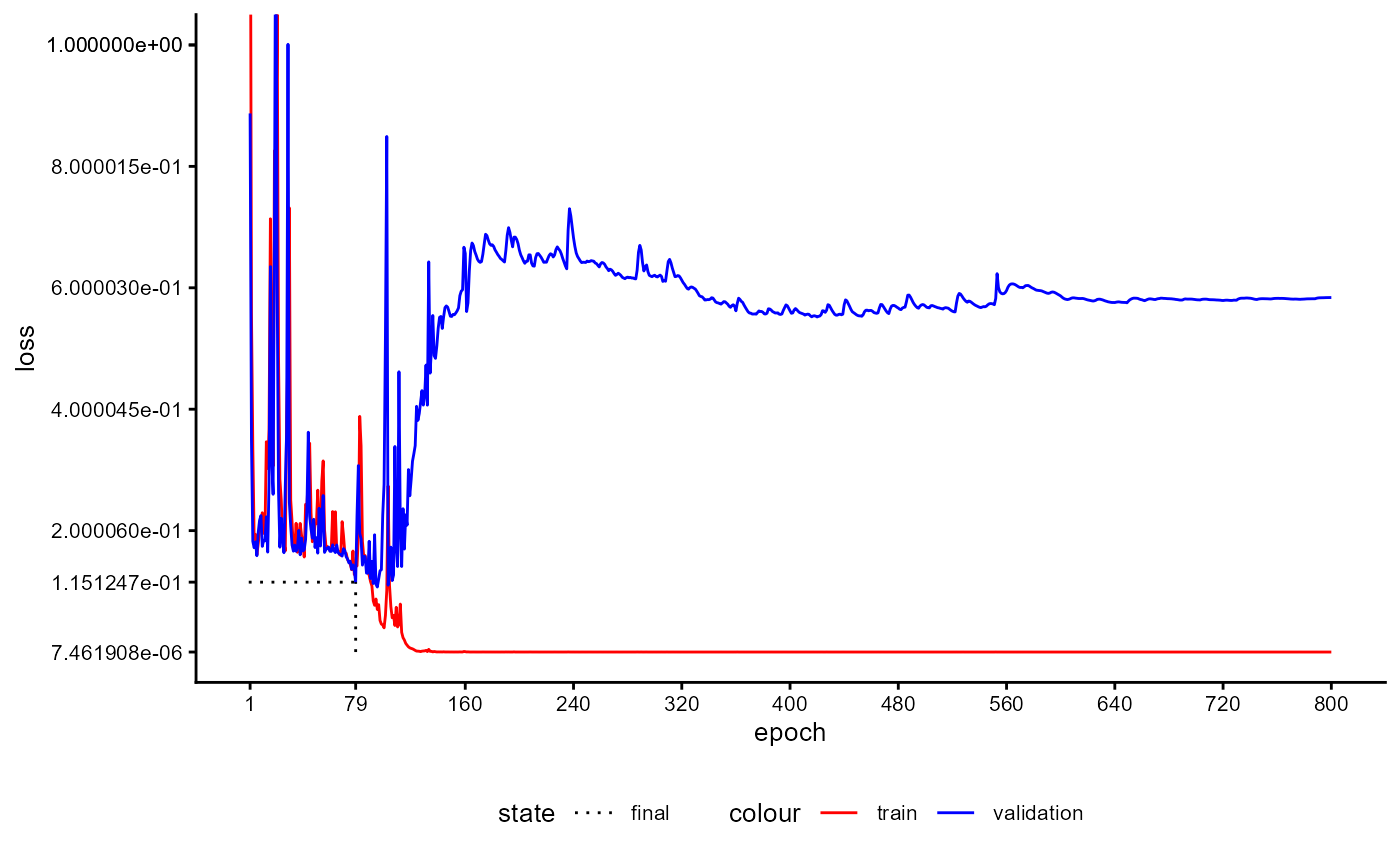

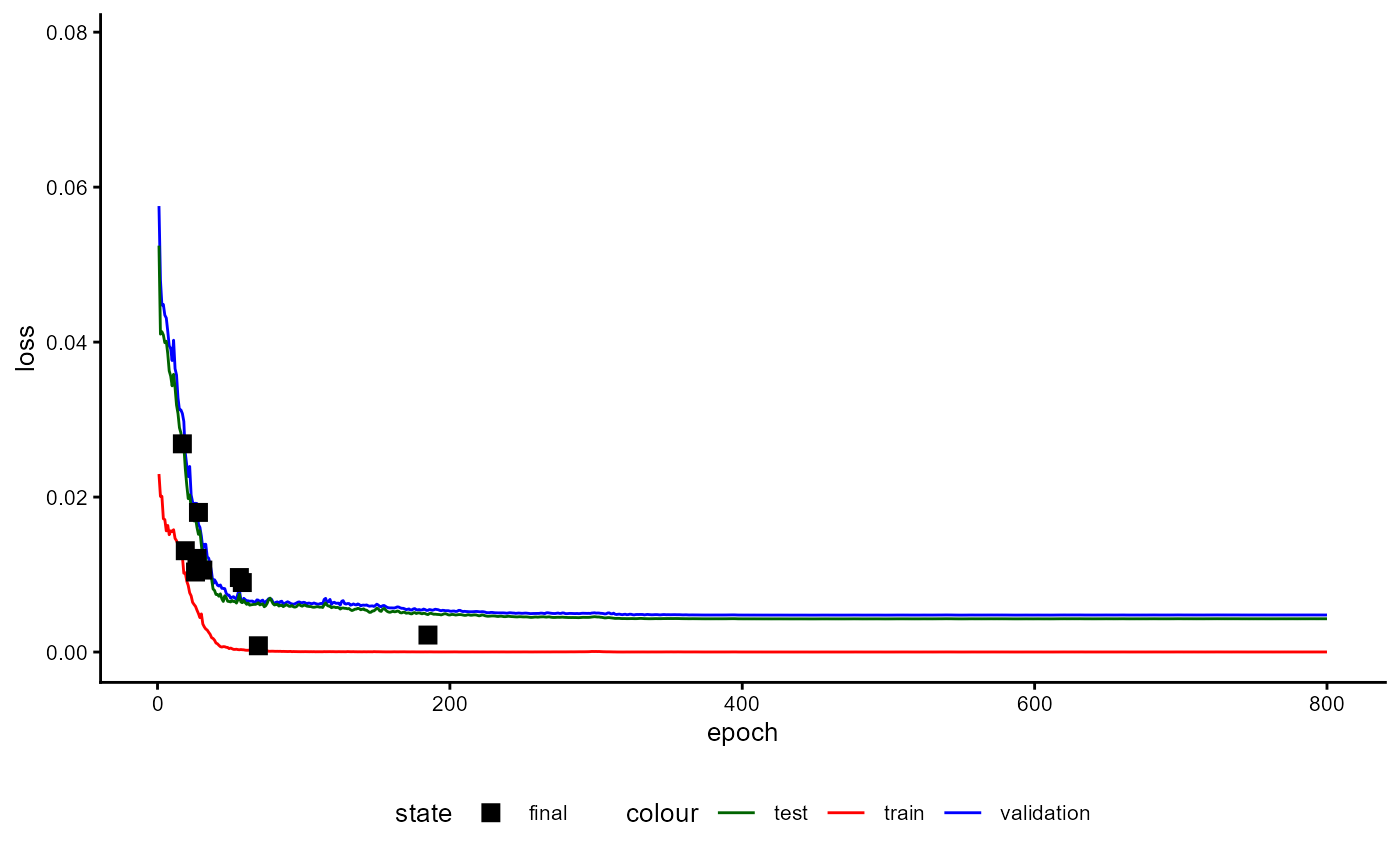

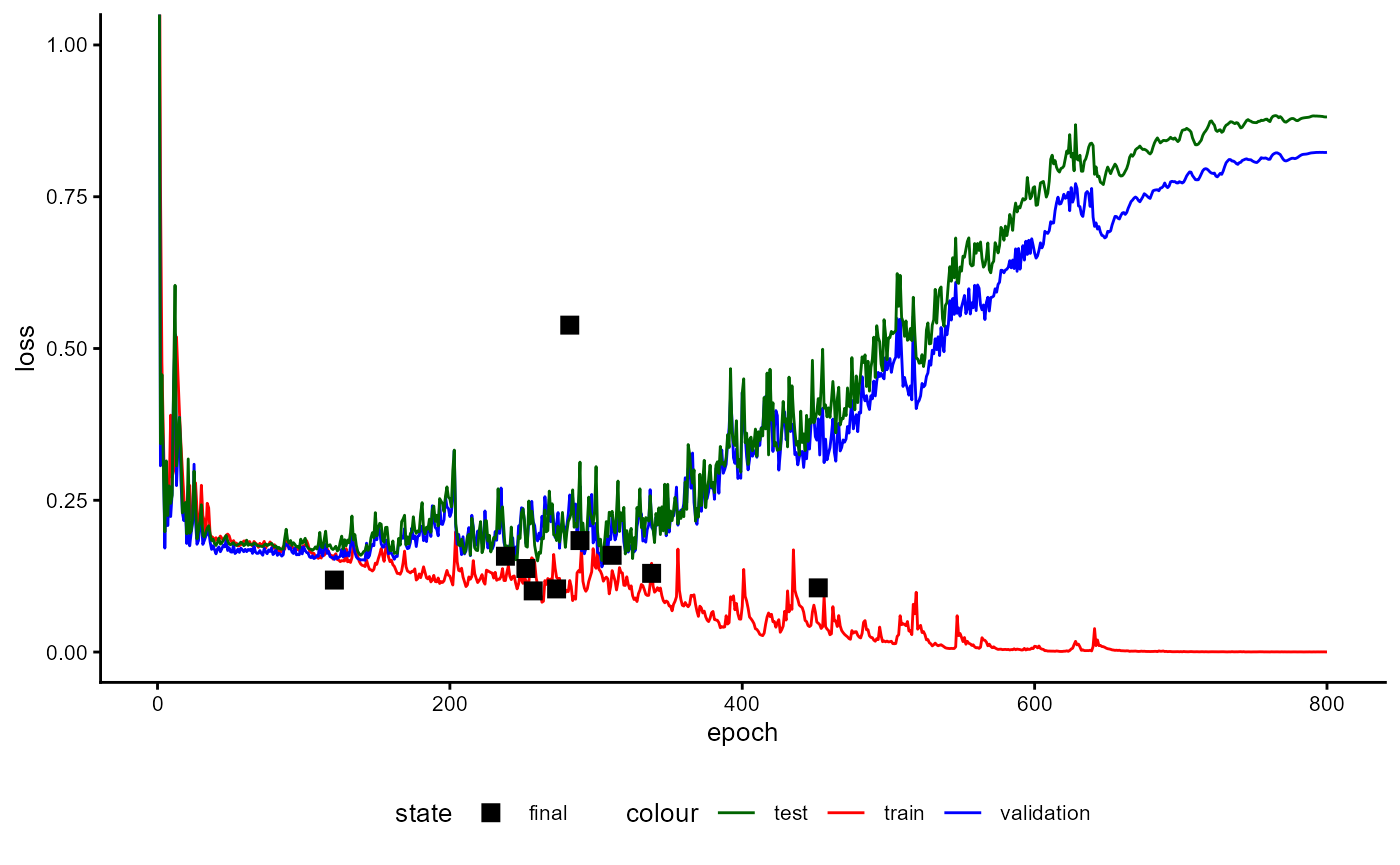

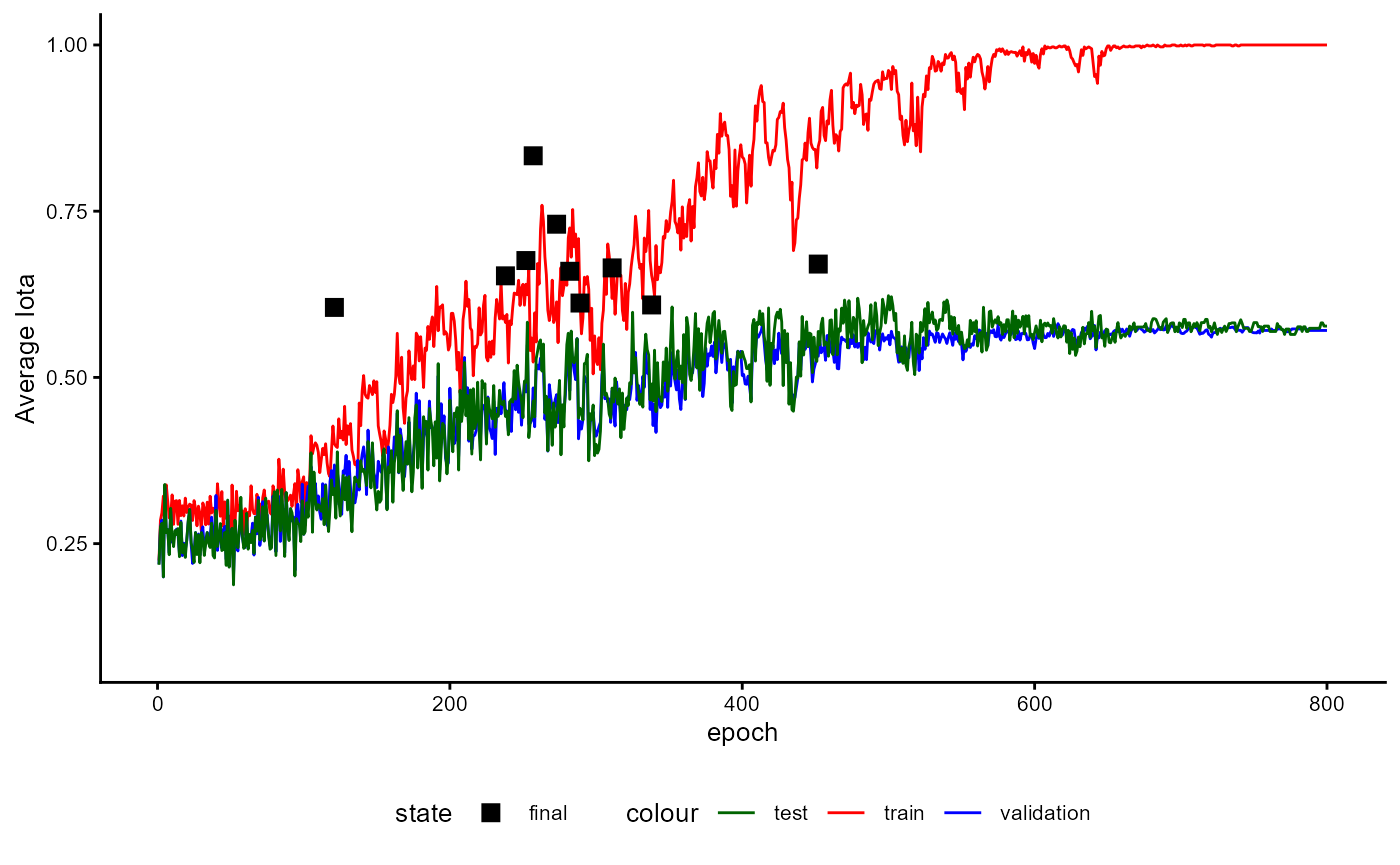

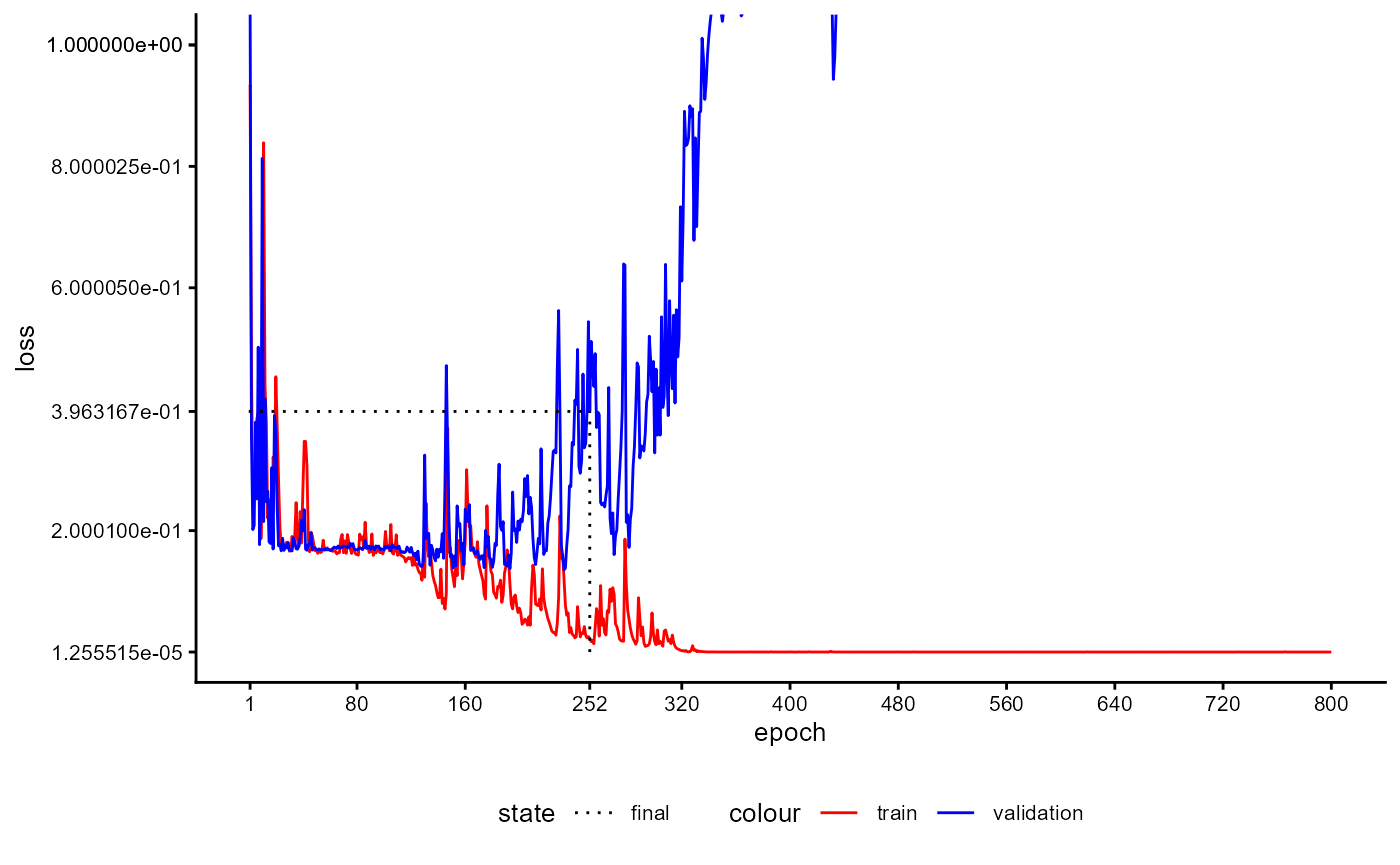

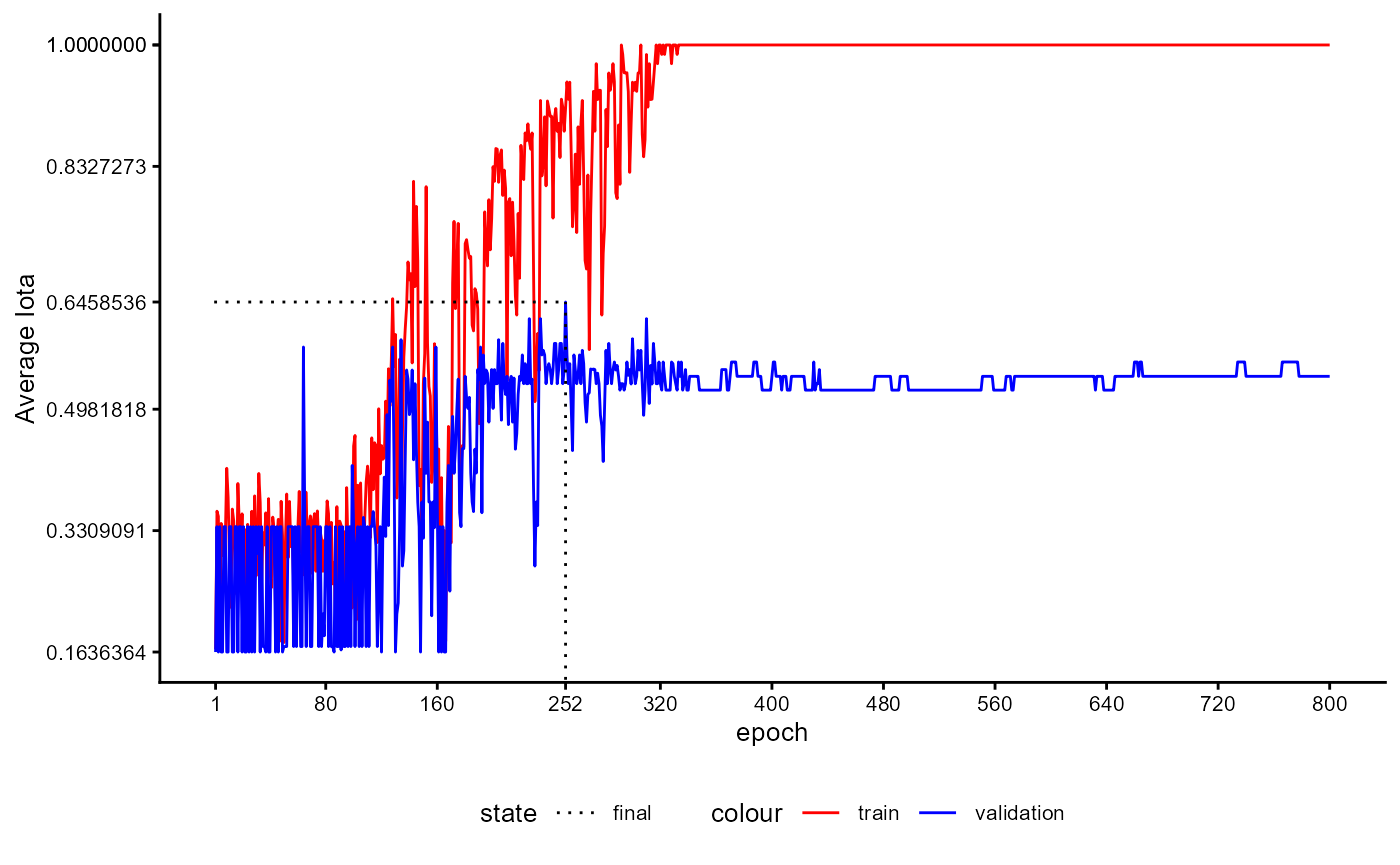

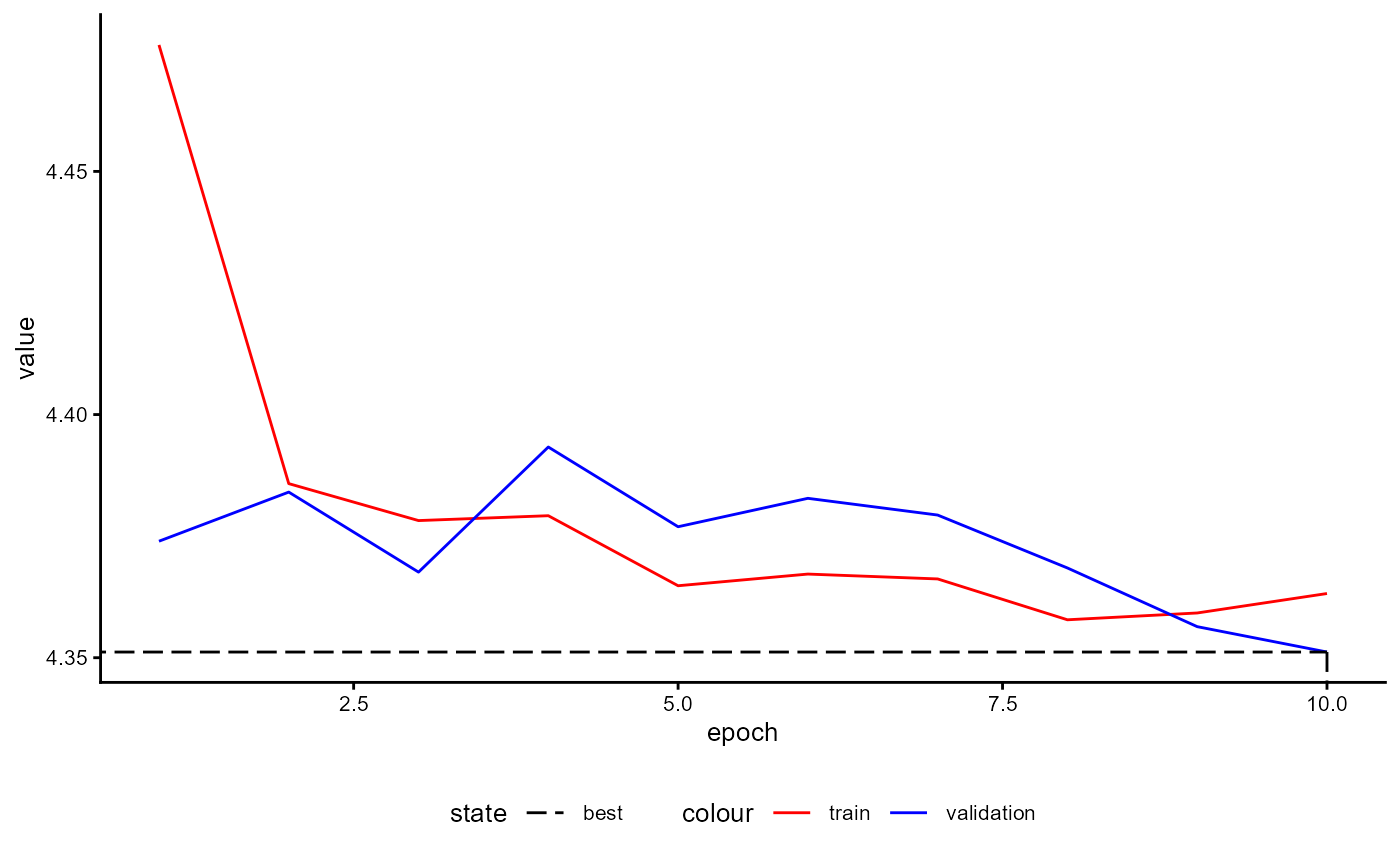

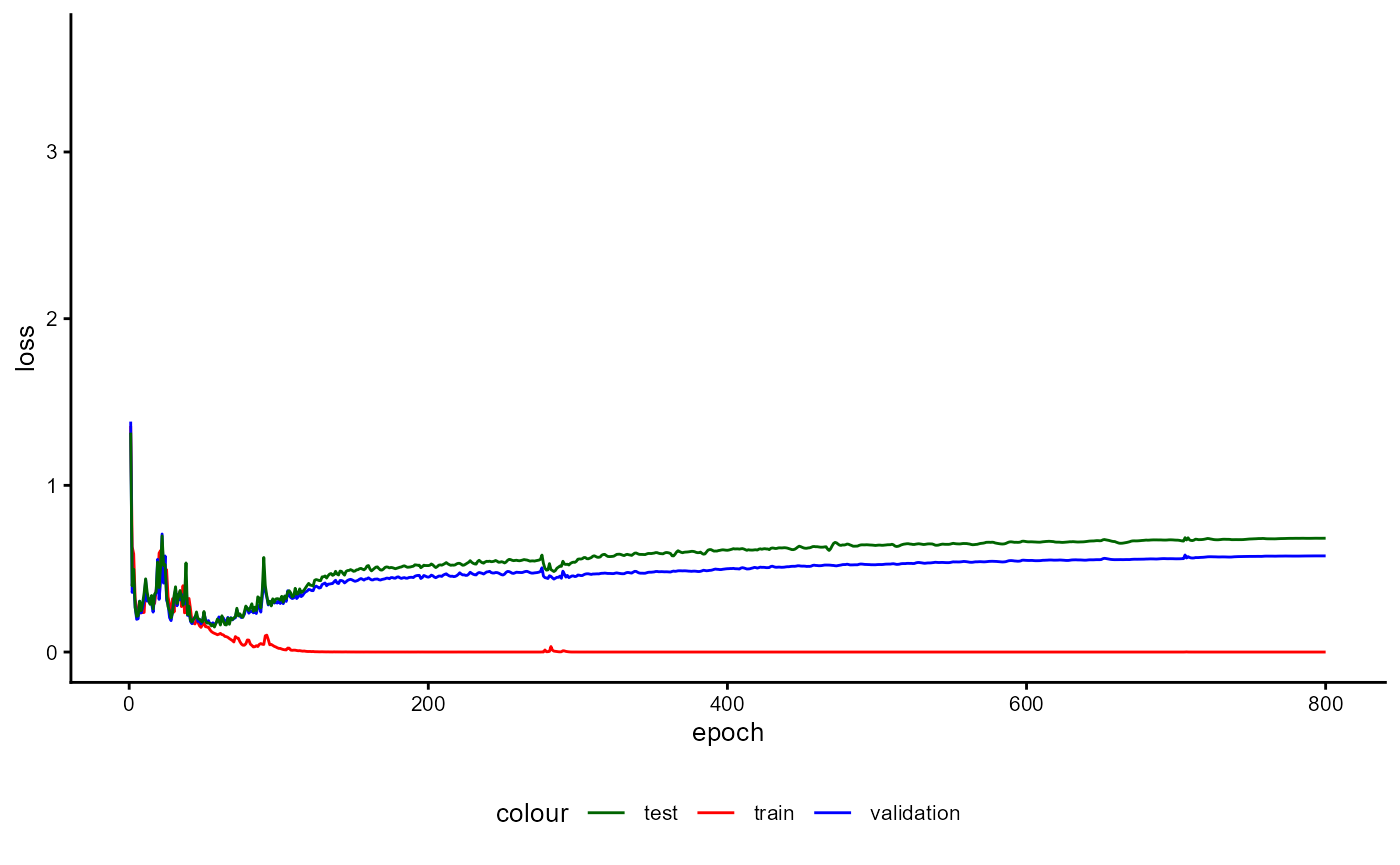

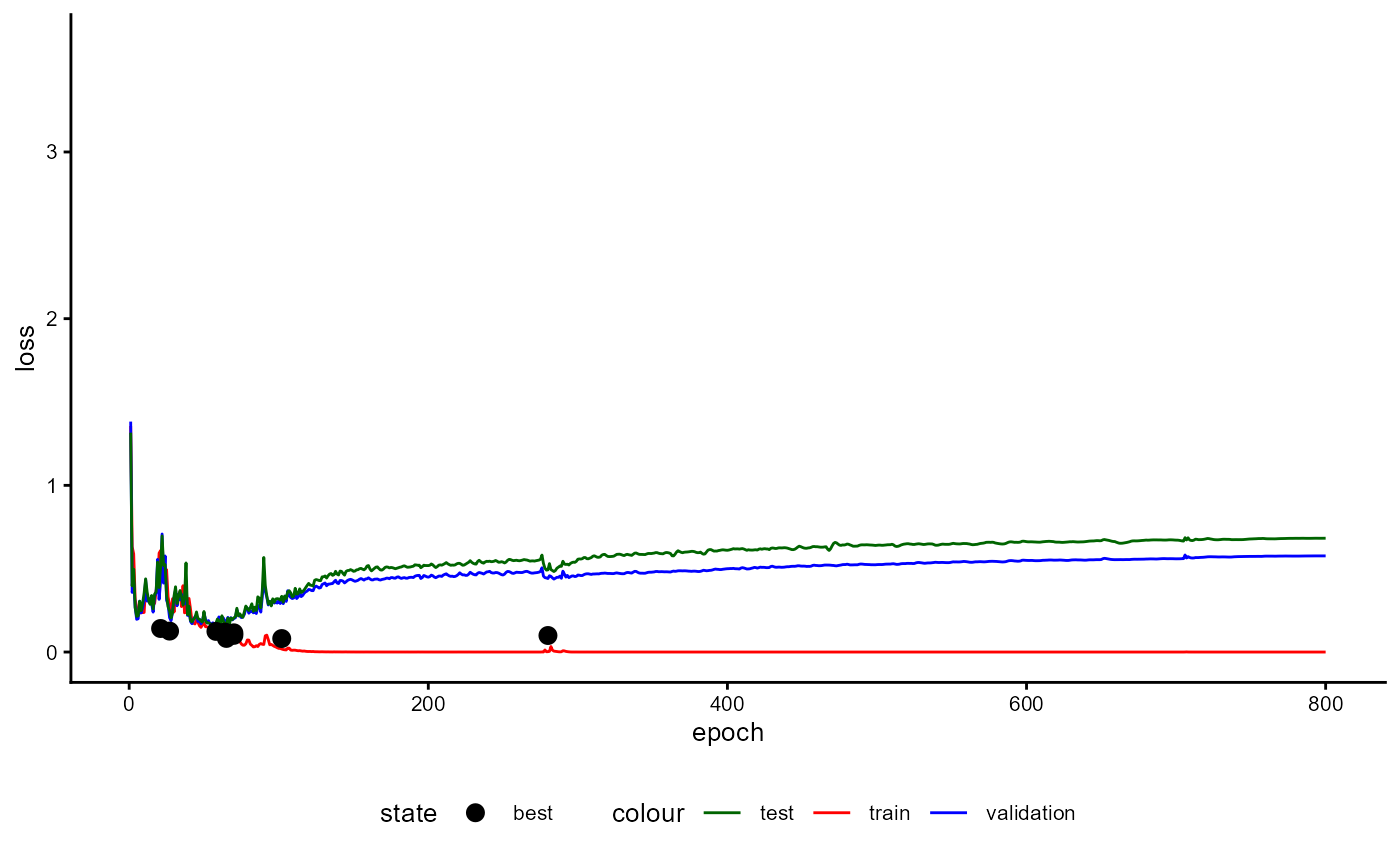

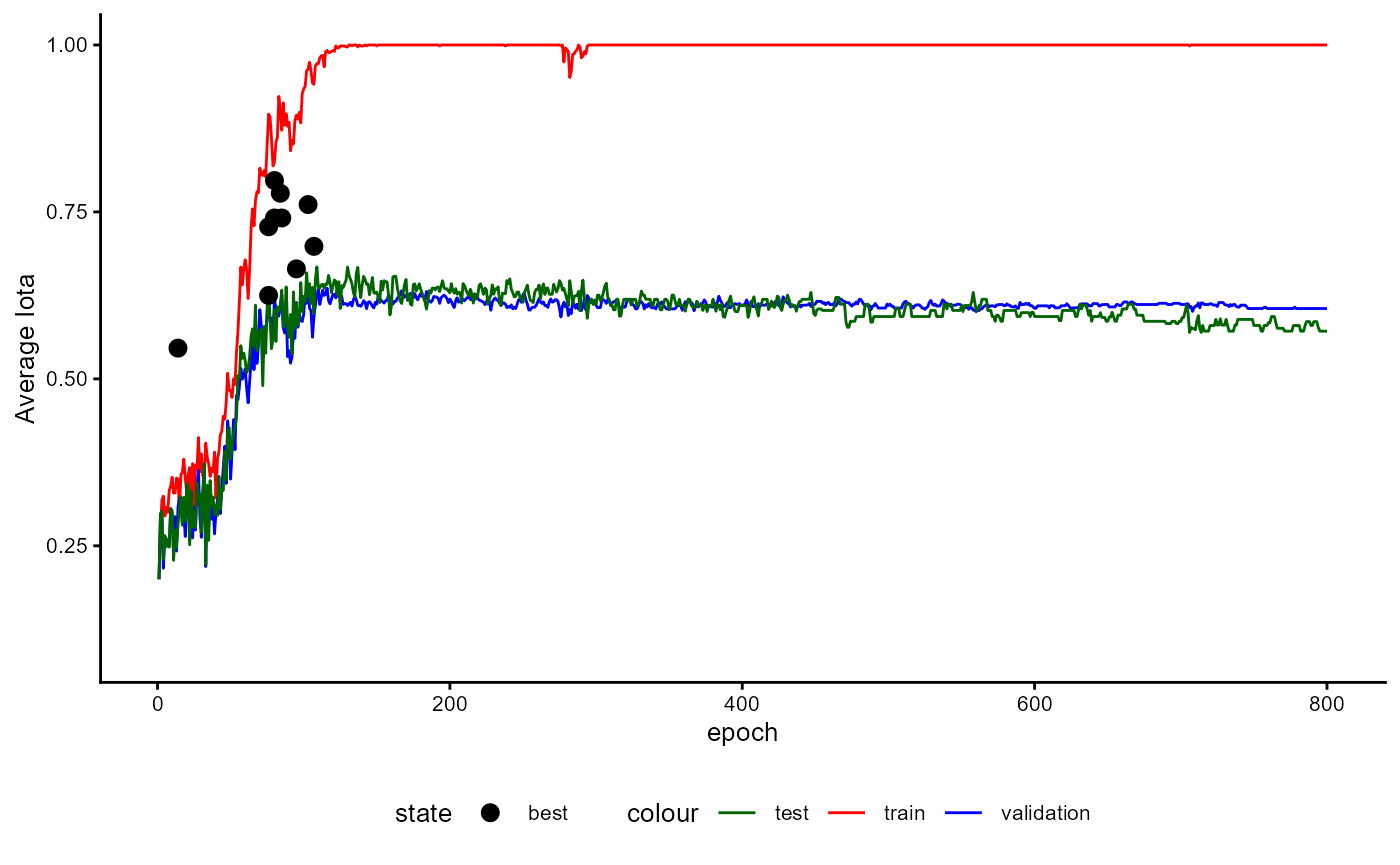

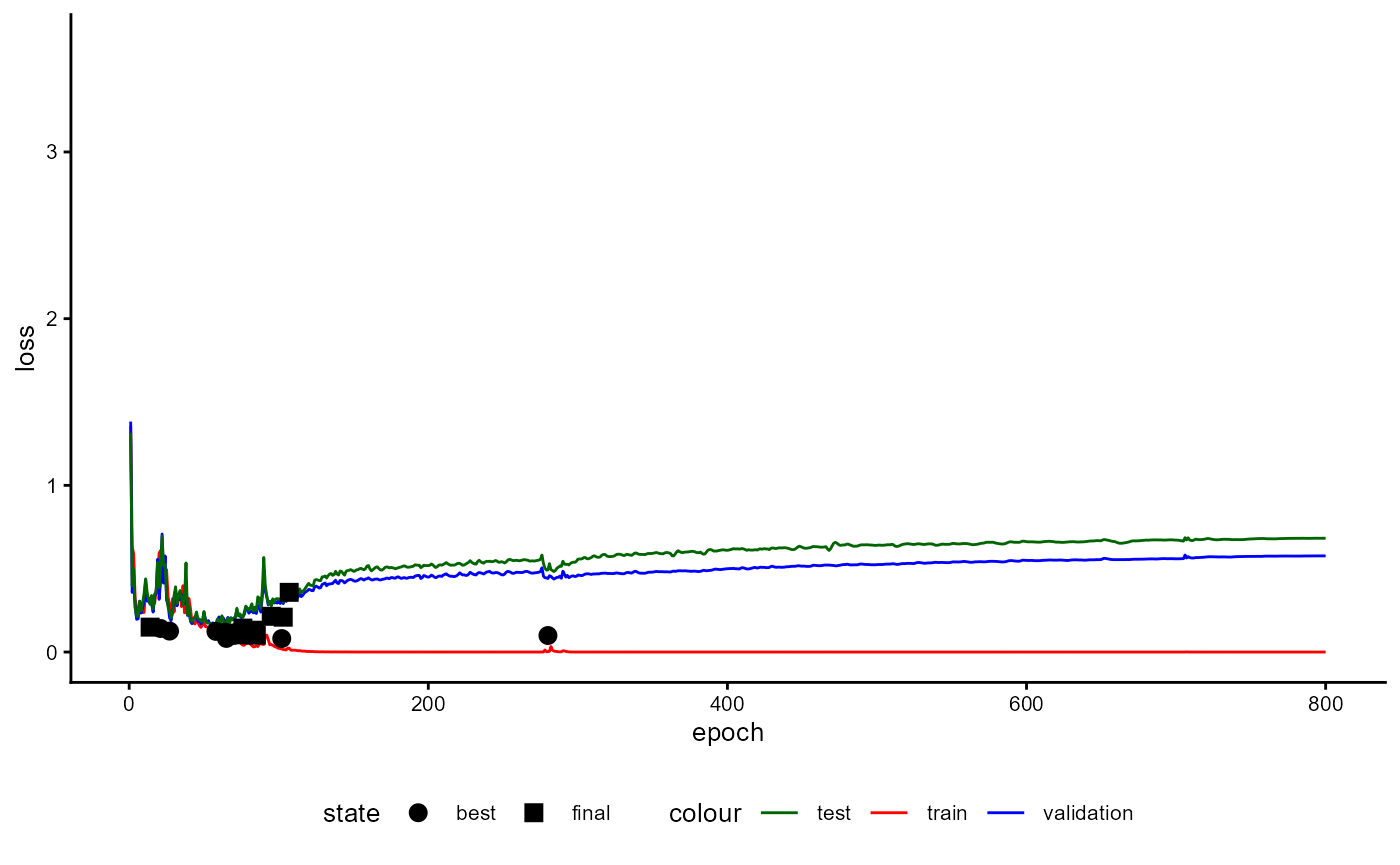

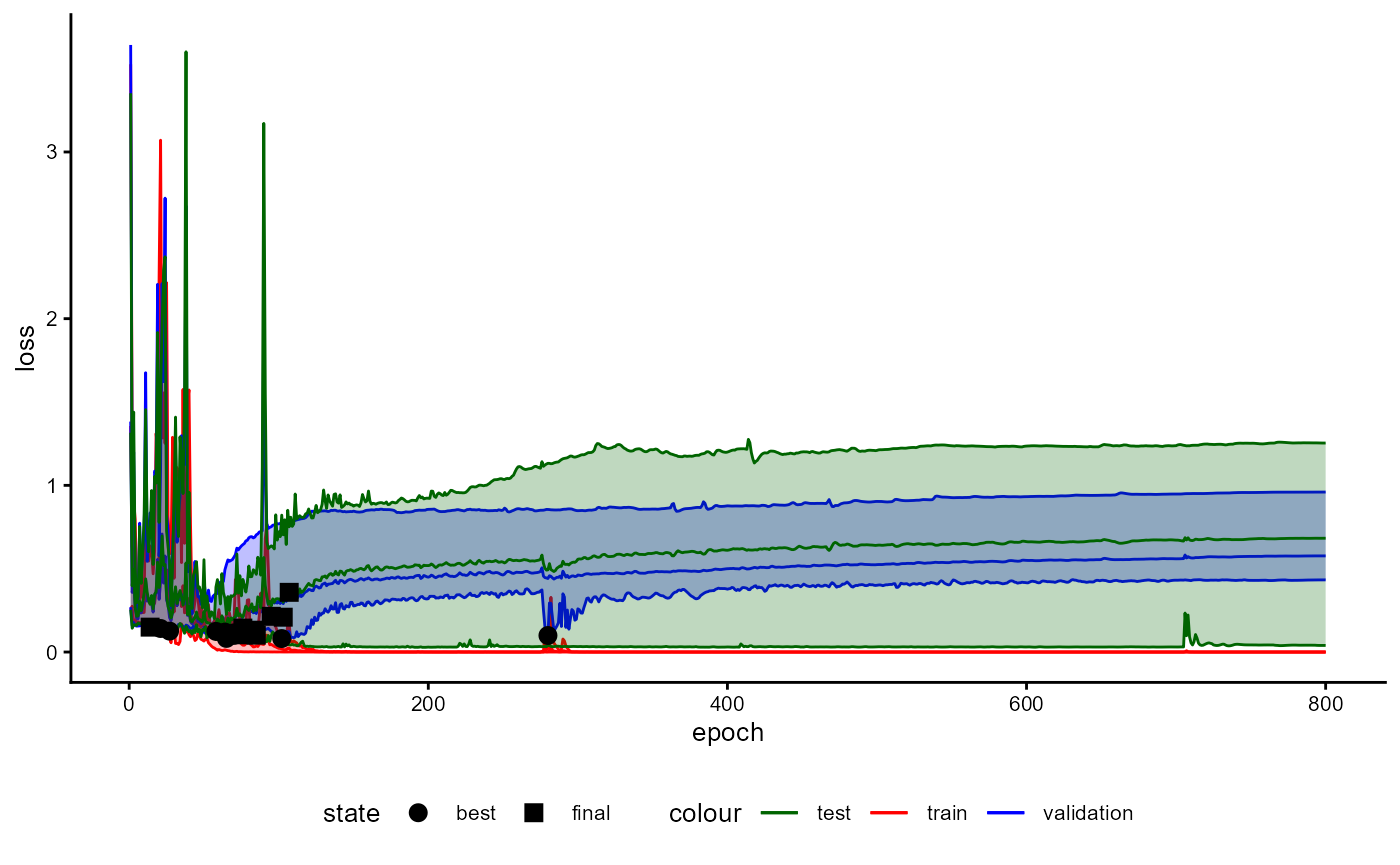

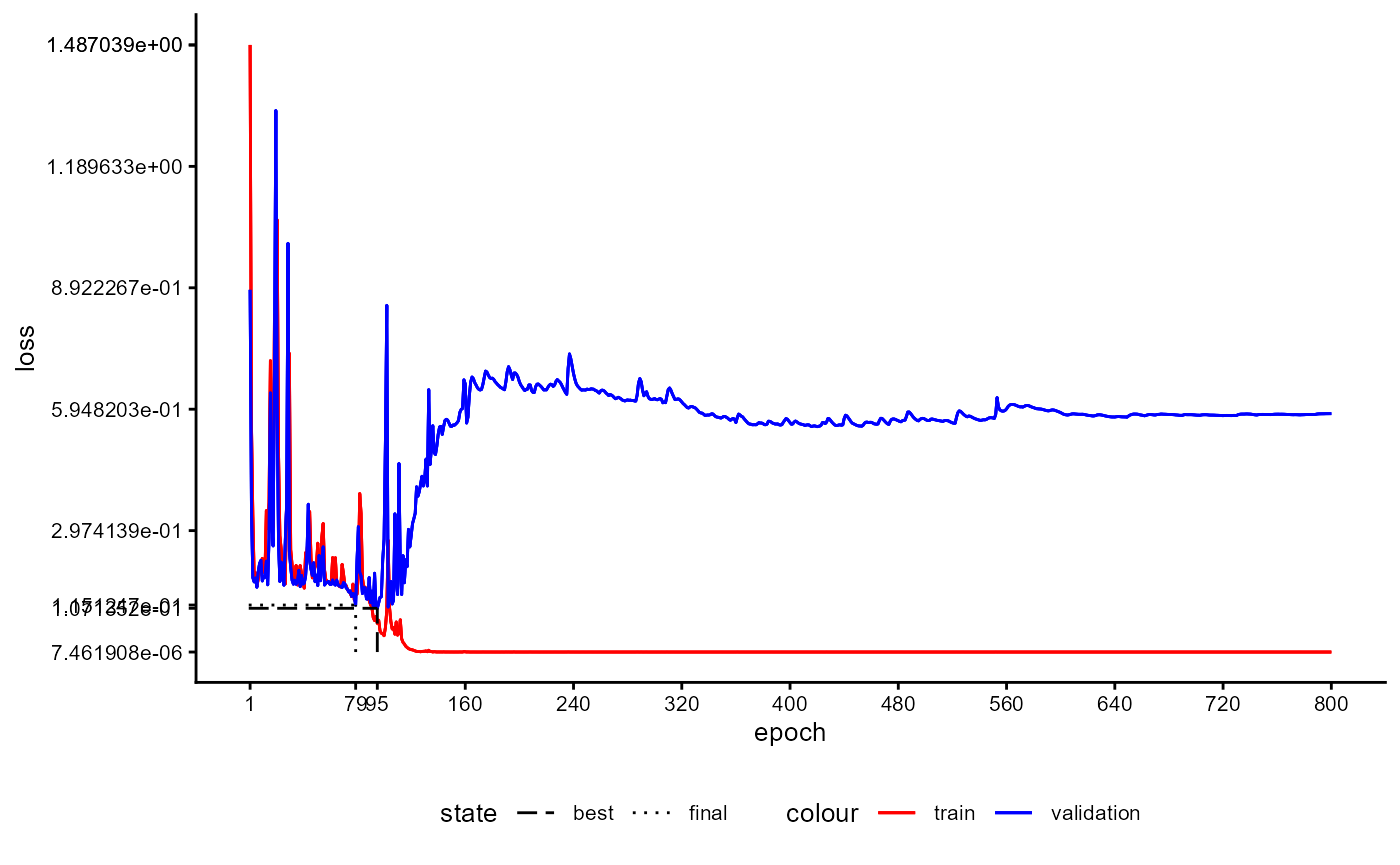

In order to visualize the progress of training you can request a plot

which shows how important performance measures develop over epochs. You

can choose between classifier’s loss ("loss"), accuracy

("accuracy"),

balanced accuracy ("balanced_accuracy"), and average

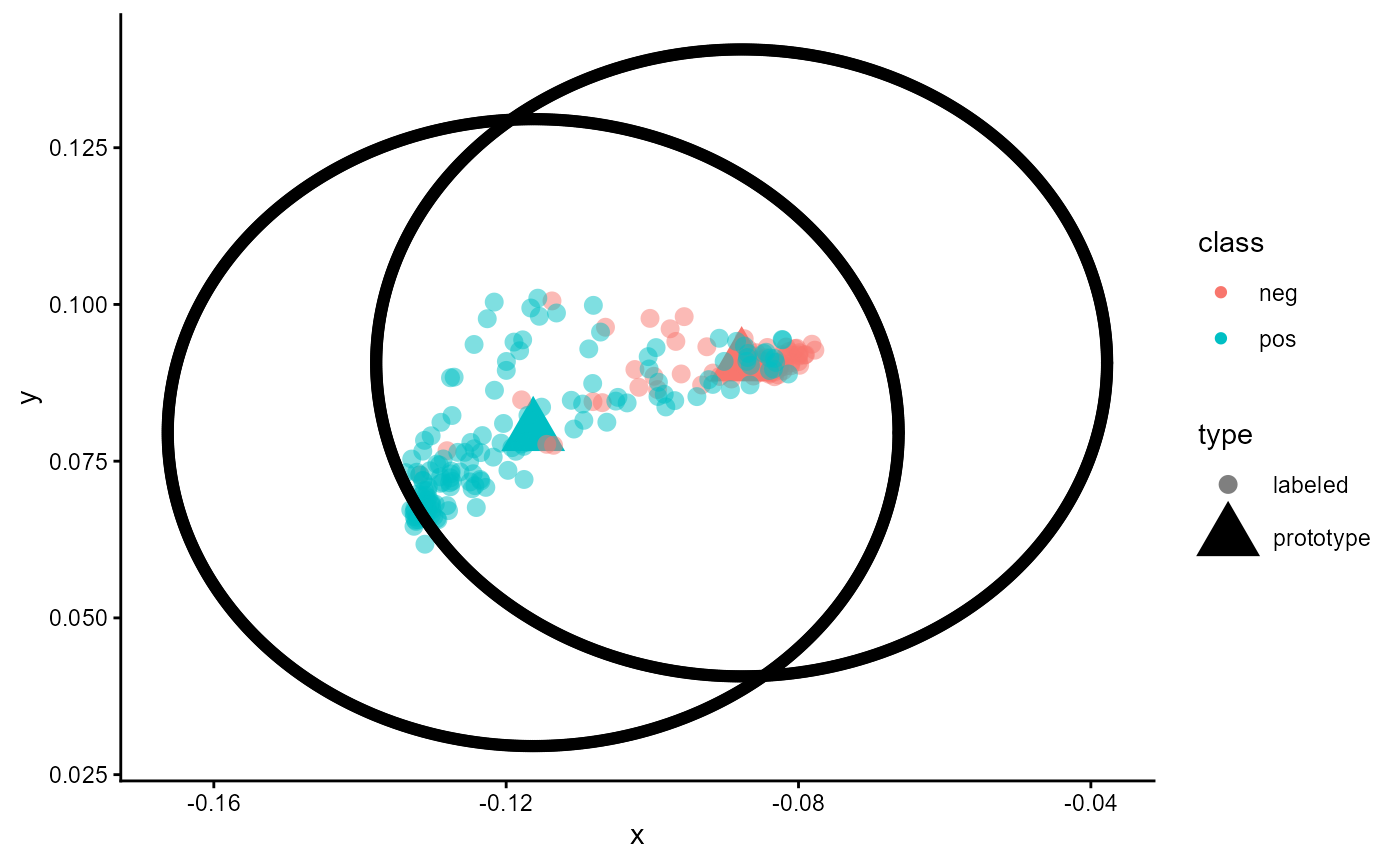

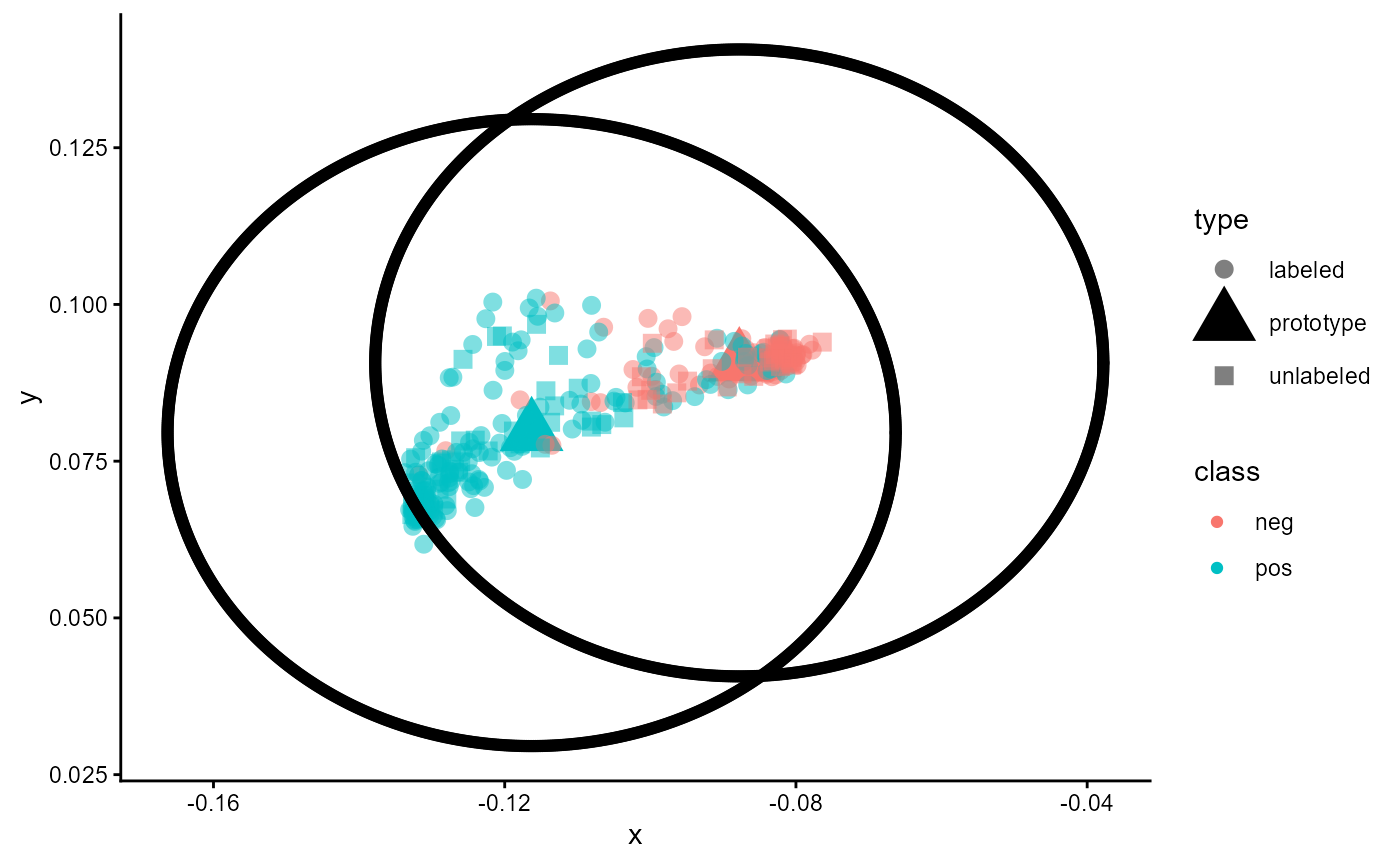

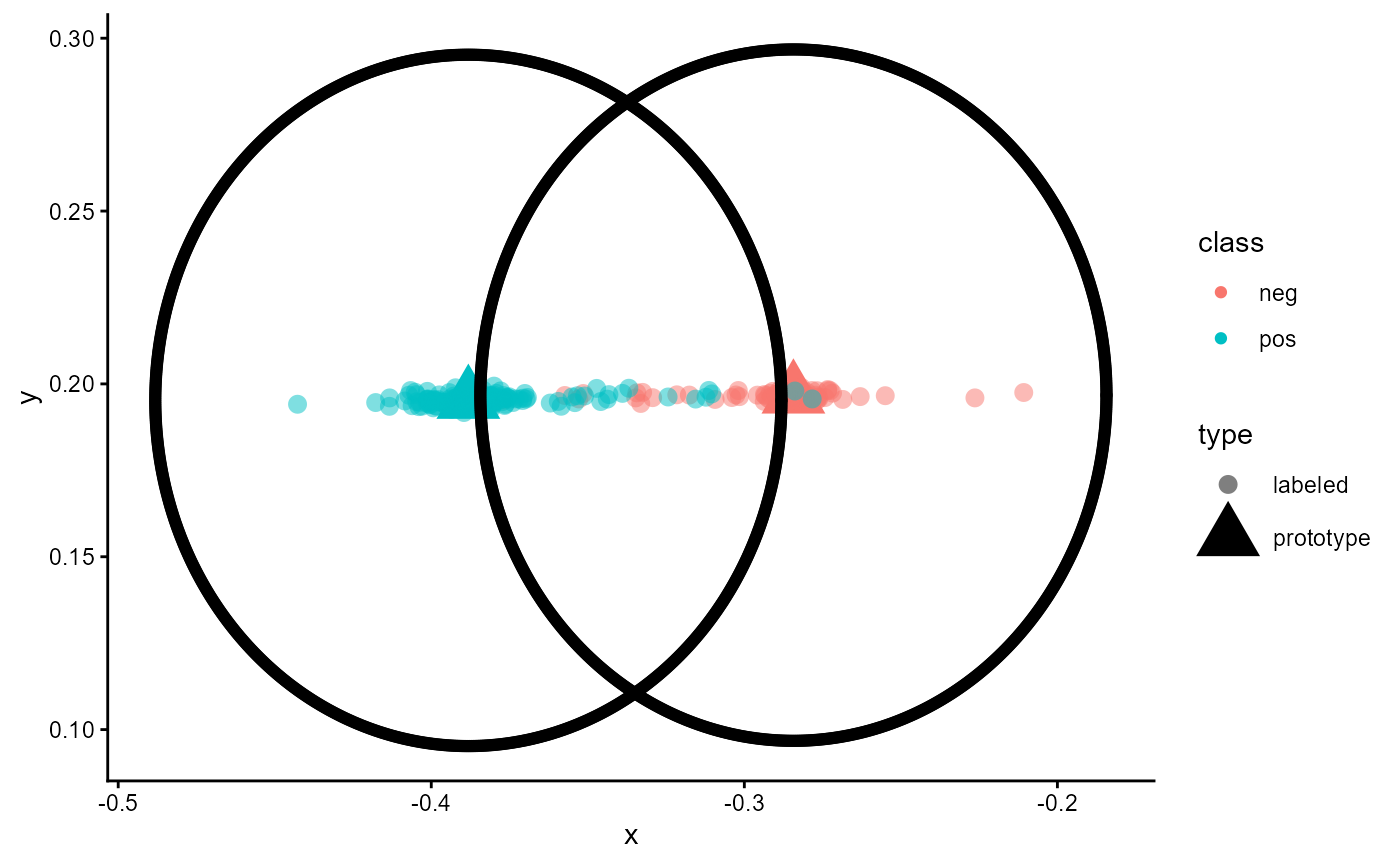

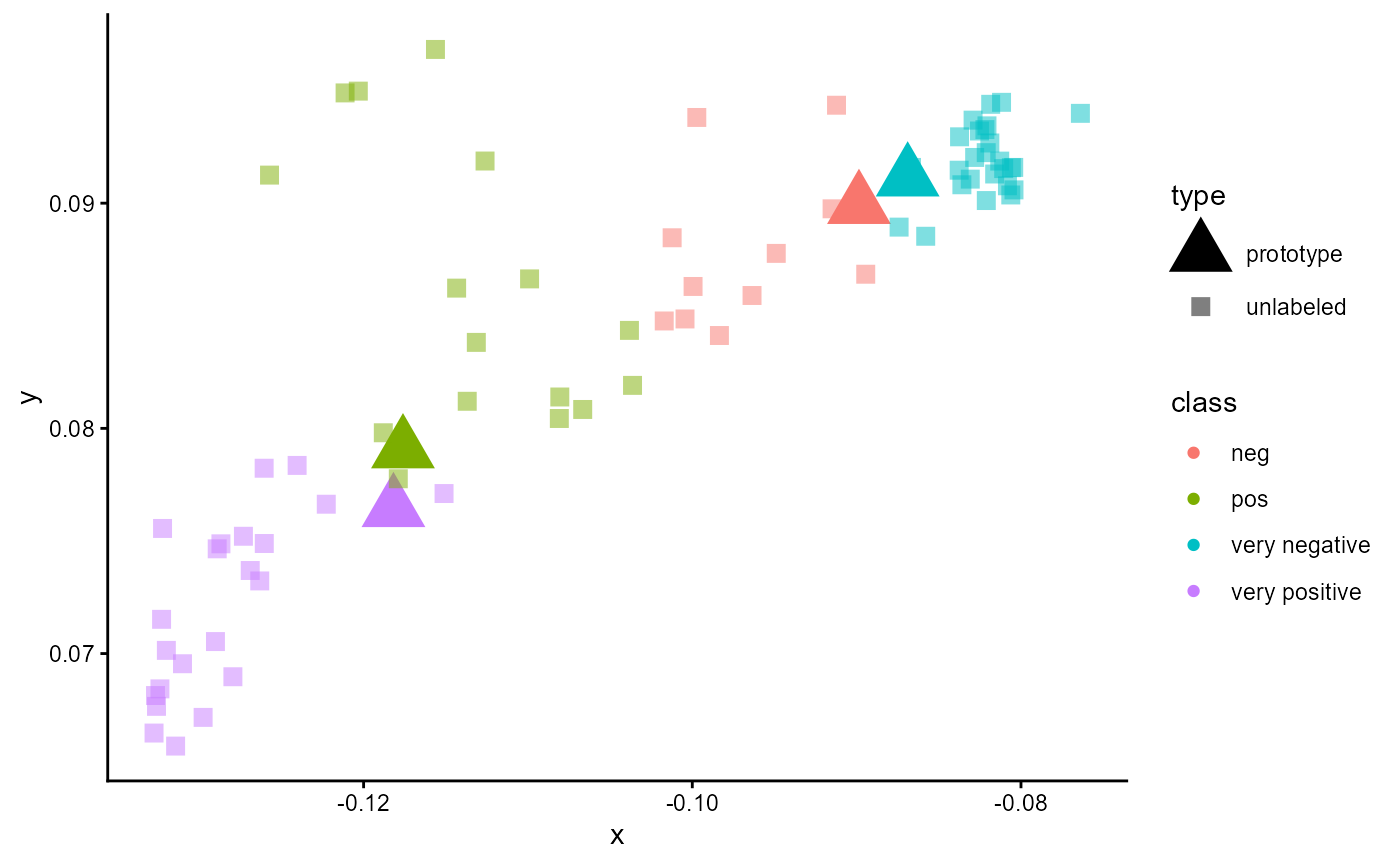

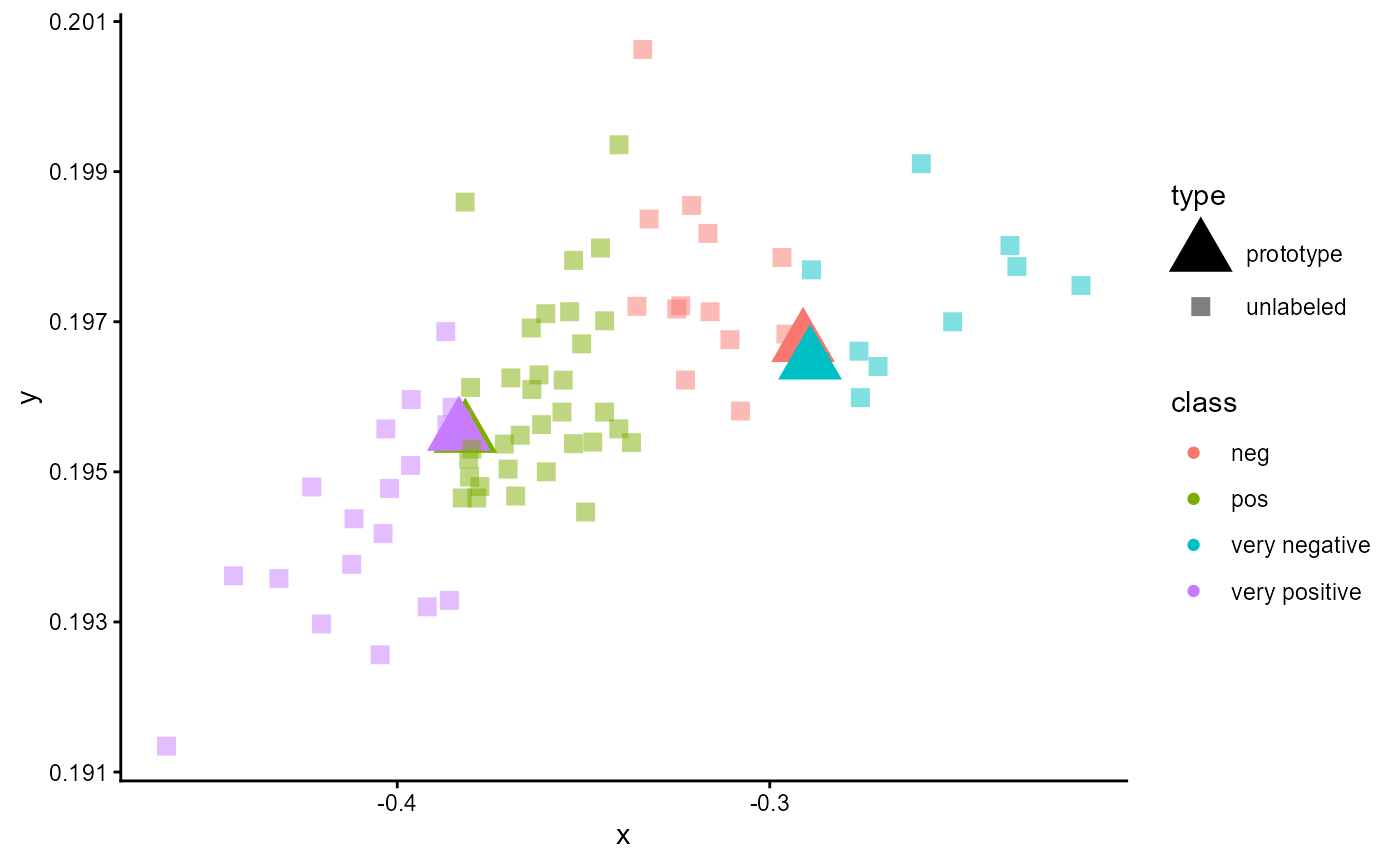

iota("avg_iota"). Set final_training=FALSE to